Chaining DMA Design Example, Altera IP Compiler for PCI Express User Manual


Chapter 15: Testbench and Design Example

Chaining DMA Design Example

IP Compiler for PCI Express User Guide

August 2014

Altera Corporation

The testbench has several Verilog HDL parameters that control the overall operation of the testbench. These parameters are described in Table 15–3.

Chaining DMA Design Example

This design example shows how to create a chaining DMA native endpoint that supports simultaneous DMA read and write transactions. The write DMA module implements write operations from the endpoint memory to the root complex (RC) memory. The read DMA module implements read operations from the RC memory to the endpoint memory.
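The two data directions described above can be illustrated with a small behavioral model. This is a hypothetical Python sketch, not part of the generated design: the class `ChainingDmaModel`, its methods, and the flat byte-array memories are assumptions made for illustration only. `run_concurrently` samples both source buffers before committing either destination, so the result does not depend on which engine finishes first; in a real system you would normally keep the read and write buffers disjoint.

```python
from dataclasses import dataclass


def _check_range(mem: bytearray, addr: int, length: int, name: str) -> None:
    # Reject ranges that would fall outside the modeled memory.
    if addr < 0 or length < 0 or addr + length > len(mem):
        raise ValueError(f"{name}: range [{addr}, {addr + length}) out of bounds")


@dataclass
class ChainingDmaModel:
    """Hypothetical behavioral model of the two DMA directions (not Altera code)."""
    ep_mem: bytearray  # endpoint memory
    rc_mem: bytearray  # root complex (RC) memory

    def write_dma(self, ep_addr: int, rc_addr: int, length: int) -> None:
        # Write DMA: endpoint memory -> RC memory.
        _check_range(self.ep_mem, ep_addr, length, "write source")
        _check_range(self.rc_mem, rc_addr, length, "write destination")
        self.rc_mem[rc_addr:rc_addr + length] = self.ep_mem[ep_addr:ep_addr + length]

    def read_dma(self, rc_addr: int, ep_addr: int, length: int) -> None:
        # Read DMA: RC memory -> endpoint memory.
        _check_range(self.rc_mem, rc_addr, length, "read source")
        _check_range(self.ep_mem, ep_addr, length, "read destination")
        self.ep_mem[ep_addr:ep_addr + length] = self.rc_mem[rc_addr:rc_addr + length]

    def run_concurrently(self, write: tuple, read: tuple) -> None:
        # Model simultaneous write and read DMA: sample both sources first,
        # then commit both destinations, so the outcome is order-independent.
        ep_w, rc_w, n_w = write  # (ep_addr, rc_addr, length)
        rc_r, ep_r, n_r = read   # (rc_addr, ep_addr, length)
        _check_range(self.ep_mem, ep_w, n_w, "write source")
        _check_range(self.rc_mem, rc_w, n_w, "write destination")
        _check_range(self.rc_mem, rc_r, n_r, "read source")
        _check_range(self.ep_mem, ep_r, n_r, "read destination")
        wr_data = bytes(self.ep_mem[ep_w:ep_w + n_w])
        rd_data = bytes(self.rc_mem[rc_r:rc_r + n_r])
        self.rc_mem[rc_w:rc_w + n_w] = wr_data
        self.ep_mem[ep_r:ep_r + n_r] = rd_data


if __name__ == "__main__":
    m = ChainingDmaModel(ep_mem=bytearray(b"EPDATA.."), rc_mem=bytearray(b"RCDATA.."))
    m.run_concurrently(write=(0, 0, 6), read=(0, 0, 6))
    print(m.rc_mem, m.ep_mem)
```

The model only tracks where data moves; descriptor chaining, TLP formation, and timing are deliberately left out.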

When operating on a hardware platform, the DMA is typically controlled by a software application running on the root complex processor. In simulation, the testbench generated by the IP Compiler for PCI Express, along with this design example, provides a BFM driver module in Verilog HDL or VHDL that controls the DMA operations. Because the example relies on no hardware interface other than the PCI Express link, you can use the design example for the initial hardware validation of your system.

The design example includes the following two main components:

■ The IP core variation
■ An application layer design example

Both components are automatically generated along with a testbench. All of the components are generated in the language (Verilog HDL or VHDL) that you selected for the variation file.

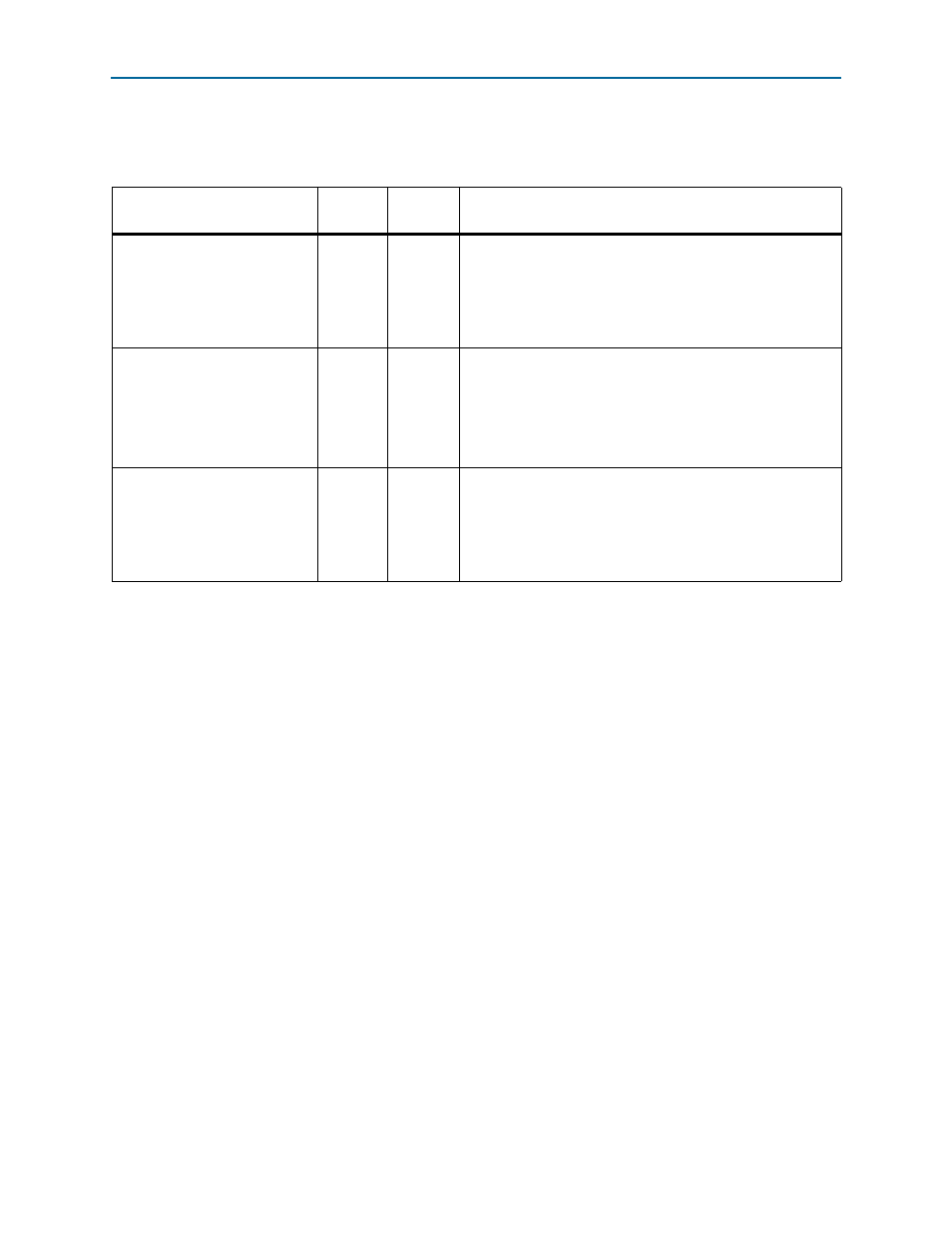

Table 15–3. Testbench Verilog HDL Parameters for the Root Port Testbench

| Parameter | Allowed Values | Default Value | Description |
|---|---|---|---|
| PIPE_MODE_SIM | 0 or 1 | 1 | Selects the PIPE interface (PIPE_MODE_SIM=1) or the serial interface (PIPE_MODE_SIM=0) for the simulation. The PIPE interface typically simulates much faster than the serial interface. If the variation file implements only the PIPE interface, setting PIPE_MODE_SIM to 0 has no effect and the PIPE interface is always used. |
| NUM_CONNECTED_LANES | 1, 2, 4, 8 | 8 | Controls how many lanes the testbench interconnects. Setting this parameter to a lower number simulates the endpoint operating on a narrower PCI Express interface than the maximum. If your variation implements only a ×1 IP core, this setting has no effect and only one lane is used. |
| FAST_COUNTERS | 0 or 1 | 1 | Setting this parameter to 1 speeds up simulation by making many of the timing counters in the IP Compiler for PCI Express operate faster than specified in the PCI Express specification. This parameter should usually be set to 1, but it can be set to 0 if you need to simulate the true time-out values. |
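The rules in Table 15–3 can be captured in a small checker, for example to validate settings in a simulation script before launching a run. This is a hypothetical Python sketch: `RootPortTbParams`, `effective_interface`, and `effective_lanes` are illustrative names, not part of the generated testbench. The model encodes only the behavior the table states; in particular, it does not model what happens when NUM_CONNECTED_LANES exceeds the width of a multi-lane variation, because the table does not specify that case.

```python
from dataclasses import dataclass

# Allowed values per parameter, as listed in Table 15-3.
ALLOWED_VALUES = {
    "PIPE_MODE_SIM": (0, 1),
    "NUM_CONNECTED_LANES": (1, 2, 4, 8),
    "FAST_COUNTERS": (0, 1),
}


@dataclass(frozen=True)
class RootPortTbParams:
    """Hypothetical mirror of the root port testbench parameters and defaults."""
    PIPE_MODE_SIM: int = 1
    NUM_CONNECTED_LANES: int = 8
    FAST_COUNTERS: int = 1

    def __post_init__(self) -> None:
        # Reject any value outside the allowed set for its parameter.
        for name, allowed in ALLOWED_VALUES.items():
            value = getattr(self, name)
            if value not in allowed:
                raise ValueError(f"{name}={value} not in {allowed}")


def effective_interface(params: RootPortTbParams, variation_pipe_only: bool) -> str:
    # A variation that implements only the PIPE interface always uses PIPE,
    # so PIPE_MODE_SIM=0 has no effect in that case.
    if variation_pipe_only or params.PIPE_MODE_SIM == 1:
        return "pipe"
    return "serial"


def effective_lanes(params: RootPortTbParams, variation_is_x1: bool) -> int:
    # A x1 variation always uses one lane, whatever NUM_CONNECTED_LANES says.
    return 1 if variation_is_x1 else params.NUM_CONNECTED_LANES
```

For example, `effective_interface(RootPortTbParams(PIPE_MODE_SIM=0), variation_pipe_only=True)` still reports `"pipe"`, matching the note in the PIPE_MODE_SIM row.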