HP MPX200 Multifunction Router User Manual

Page 24

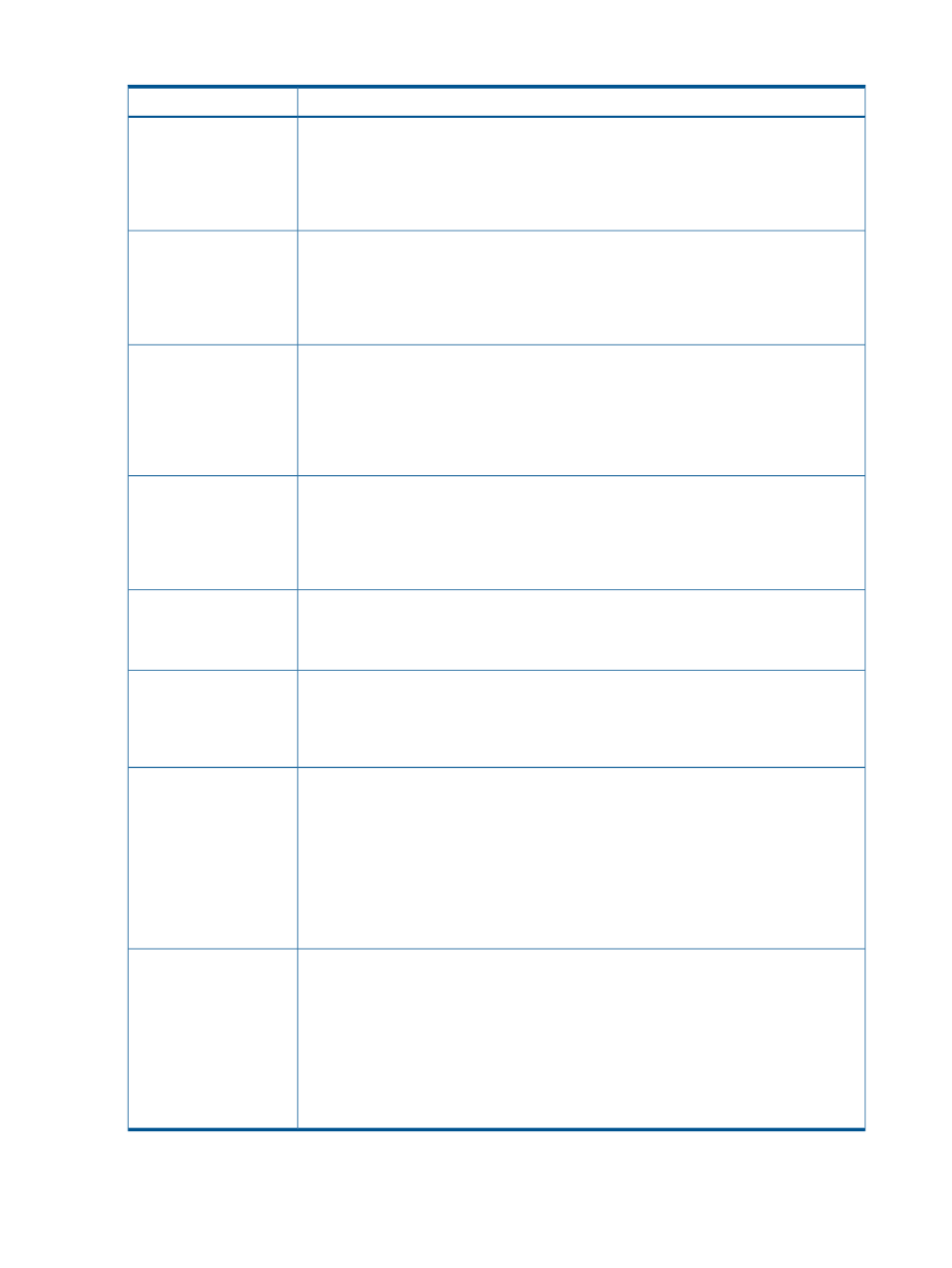

Table 5 MPX200 switch capability

Switch capability

Description

1 and 10 Gigabit Ethernet support

The 1 GbE iSCSI blade of the MPX200 comes with four copper GbE ports (802.3ab). To take advantage of full duplex gigabit capabilities, you need infrastructure of Cat5e or Cat6 cabling. The 10 GbE iSCSI/FCoE blade of the MPX200 comes with two SFP+ 10 Gb ports. You can configure either SFP+ optical or SFP+ copper connectivity. Server connections and switch interconnects can be done via SFP+ fiber cabling, in addition to Cat5e or Cat6 cabling, depending on IP switch capabilities.

Fully subscribed non-blocking backplanes or adequate per-port buffer cache

For optimal switch performance, HP recommends that the switch have at least 512 KB of buffer cache per port. Consult your switch manufacturer specifications for the total buffer cache. For example, if the switch has 48 Gigabit ports, you should have at least 24 MB of buffer cache dedicated to those ports. If the switch aggregates cache among a group of ports (for example, 1 MB of cache per 8 ports), space your utilized ports appropriately to avoid cache oversubscription.
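The buffer cache arithmetic above can be sketched as a short calculation. This is only an illustration of the 512 KB-per-port guideline. The function names are hypothetical, and the per-group limit is one reading of "space your utilized ports appropriately," not an HP-published formula.

```python
# Minimal sketch of the per-port buffer cache guideline (512 KB per port).
# Function names are illustrative and do not come from the manual.

KB_PER_PORT = 512  # recommended minimum buffer cache per port, in KB


def min_buffer_cache_kb(port_count, kb_per_port=KB_PER_PORT):
    """Return the minimum total buffer cache, in KB, for port_count ports."""
    if port_count < 0:
        raise ValueError("port_count must be non-negative")
    return port_count * kb_per_port


def max_ports_per_group(group_cache_kb, kb_per_port=KB_PER_PORT):
    """Return how many ports in a shared-cache group can be used while
    still leaving each used port at least kb_per_port of cache.
    Assumption: the group's cache is divided evenly among used ports."""
    return group_cache_kb // kb_per_port


if __name__ == "__main__":
    # 48 ports * 512 KB = 24576 KB = 24 MB, matching the example in the text.
    print(min_buffer_cache_kb(48) / 1024, "MB")
    # 1 MB shared by a group of 8 ports covers only 2 ports at 512 KB each.
    print(max_ports_per_group(1024), "ports per group")
```

Under these assumptions, a switch that shares 1 MB of cache across each group of 8 ports would support two storage ports per group at the recommended level.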

Flow Control support

IP storage networks are unique in the amount of sustained bandwidth that is required to maintain adequate performance levels under heavy workloads. You should enable Gigabit Ethernet Flow Control (802.3x) technology on the switch to relieve receive and transmit buffer cache pressure. Note: Some switch manufacturers do not recommend configuring Flow Control when using Jumbo Frames, or vice versa. Consult the switch manufacturer documentation. HP recommends choosing Flow Control over Jumbo Frames for optimal performance. Flow Control is required when using the HP DSM and MPIO.

Individual port speed and duplex setting

All ports on the switch, servers, and storage nodes should be configured to auto-negotiate duplex and speed settings. Although most switches and NICs auto-negotiate the optimal performance setting, if a single port on the IP storage network negotiates a suboptimal setting (100 Mb/s or less, half duplex, or both), the performance of the entire SAN can be negatively affected. Check each switch and NIC port to make sure that auto-negotiation has resolved to 1000 Mb/s or 10 Gb/s at full duplex.
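The per-port check described above could be automated along the following lines. This is a minimal sketch: the `PortStatus` record and the way port status is collected are assumptions made for illustration, and the real values would come from your switch and NIC management tools.

```python
# Minimal sketch: flag ports whose negotiated settings are suboptimal.
# PortStatus and the sample data are hypothetical; they are not part of
# any HP or switch-vendor API.
from dataclasses import dataclass

# Speeds the text treats as acceptable: 1000 Mb/s or 10 Gb/s.
ACCEPTABLE_SPEEDS_MBPS = {1000, 10000}


@dataclass
class PortStatus:
    name: str          # port label, for example "switch1/port3"
    speed_mbps: int    # negotiated speed in Mb/s
    full_duplex: bool  # True if the port negotiated full duplex


def suboptimal_ports(ports):
    """Return the names of ports that negotiated an unacceptable speed
    or half duplex, in the order given."""
    return [
        p.name
        for p in ports
        if p.speed_mbps not in ACCEPTABLE_SPEEDS_MBPS or not p.full_duplex
    ]


if __name__ == "__main__":
    sample = [
        PortStatus("switch1/port1", 1000, True),
        PortStatus("switch1/port2", 100, True),    # too slow
        PortStatus("server-a/nic0", 1000, False),  # half duplex
        PortStatus("mpx200/ge1", 10000, True),
    ]
    print(suboptimal_ports(sample))
```

Any port the function returns should be investigated before the IP storage network is put under load.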

Link Aggregation/Trunking support

It is important to enable Link Aggregation and/or Trunking support when building a high-performance, fault-tolerant IP storage network. HP recommends implementing Link Aggregation and/or Trunking technology when doing Switch to Switch Trunking, Server NIC Load Balancing, and Server NIC Link Aggregation (802.3ad).

VLAN support

Implementing a separate subnet or VLAN for the IP storage network is an IP-SAN best practice. If you implement VLAN technology within the switch infrastructure, you typically need to enable VLAN Tagging (802.1q) and/or VLAN Trunking (802.1q or Inter-Switch Link [ISL] from Cisco). Consult your switch manufacturer configuration guidelines when enabling VLAN support.

Spanning Tree/Rapid Spanning Tree

In order to build a fault-tolerant IP storage network, you need to connect multiple switches into a single Layer 2 (OSI Model) broadcast domain using multiple interconnects. In order to avoid Layer 2 loops, you must implement the Spanning Tree protocol (802.1D) or Rapid Spanning Tree protocol (802.1w) in the switch infrastructure. Failing to do so can cause numerous issues on the IP storage networks including performance degradation or even traffic storms. HP recommends implementing Rapid Spanning Tree if the switch infrastructure supports it for faster Spanning Tree convergence. If the switch is capable, consider disabling spanning tree on the server switch ports so that they do not participate in the spanning tree convergence protocol timings. Note: You should configure FCoE with spanning-tree disabled at the first level server edge switch.

Jumbo Frames support

Sequential read and write workloads, or streaming workloads, can benefit from a maximum frame size larger than 1514 bytes. The 1 GbE iSCSI and 10 GbE iSCSI/FCoE ports are capable of frame sizes up to 9 KB. Better performance is realized when the NICs and iSCSI initiators are configured for 4 KB (maximum frame size of 4088 bytes) jumbo frames. You must enable Jumbo Frames on the switch, the 1 GbE iSCSI and 10 GbE iSCSI/FCoE modules, and all servers connected to the IP-SAN. Typically, you enable Jumbo Frames globally on the switch or per VLAN, and on a per-port basis on the server. Note: Some switch manufacturers do not recommend configuring Jumbo Frames when using Flow Control, or vice versa. Consult the switch manufacturer documentation. HP recommends choosing Flow Control over Jumbo Frames for optimal performance.
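Because Jumbo Frames must be enabled on every device in the path, it can help to compare the configured maximum frame size of each device against the target. The sketch below assumes you have already collected those values by hand or with your own tooling. The device names and the 9216-byte switch setting are hypothetical examples, not values from this manual.

```python
# Minimal sketch: find devices whose maximum frame size is below the
# jumbo frame target. The inventory passed in is hypothetical sample data.

RECOMMENDED_JUMBO_FRAME_BYTES = 4088  # 4 KB jumbo frame size from the text


def devices_blocking_jumbo(frame_sizes, target=RECOMMENDED_JUMBO_FRAME_BYTES):
    """Return a sorted list of device names whose configured maximum
    frame size is smaller than the target frame size.

    frame_sizes maps a device name to its maximum frame size in bytes.
    """
    return sorted(name for name, size in frame_sizes.items() if size < target)


if __name__ == "__main__":
    inventory = {
        "switch1": 9216,       # assumed switch-wide jumbo setting
        "mpx200-iscsi": 4088,  # blade port set to the 4 KB jumbo size
        "server-a": 4088,
        "server-b": 1514,      # still at the standard frame size
    }
    print(devices_blocking_jumbo(inventory))
```

An empty result means that every listed device accepts frames of the target size. Any device that is returned needs Jumbo Frames enabled before the larger frame size can be used end to end.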

Planning the MPX200 installation