LSF-HPC Equivalents of SLURM srun Options – HP XC System 3.x Software User Manual


$ export SLURM_JOBID=150

$ export SLURM_NPROCS=4

$ mpirun -tv -srun additional parameters as needed

After you finish with this interactive allocation, exit the /bin/bash process in the first terminal; this ends the LSF job.

Note:

If you exported any variables, such as SLURM_JOBID and SLURM_NPROCS, be sure to unset them as follows before submitting any further jobs from the second terminal:

$ unset SLURM_JOBID

$ unset SLURM_NPROCS
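
One way to avoid having to remember the unset step is to export the variables only inside a subshell, so that they disappear when the command finishes. The following is a minimal sketch, not part of the HP XC software; the helper name with_slurm_env is hypothetical.

```shell
# Hypothetical helper: run a command with SLURM_JOBID and SLURM_NPROCS
# exported only for that command.
# The function body is a subshell "( ... )", so the exports never reach
# the calling shell and no unset is needed afterwards.
with_slurm_env() (
    export SLURM_JOBID="$1" SLURM_NPROCS="$2"
    shift 2          # drop the job ID and process count; the rest is the command
    "$@"
)
```

For example, with_slurm_env 150 4 followed by the command to run sets both variables for that command only; the calling shell's environment is left unchanged.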

You do not need to launch the /bin/bash shell to be able to interact with any compute node resources; any running job will suffice. This is excellent for checking on long-running jobs. For example, if you had submitted a CPU-intensive job, you could execute the uptime command on all nodes in the allocation to confirm an expected high load on the nodes. The following is an example of this; the LSF JOBID is 200 and the SLURM JOBID is 250:

$ srun --jobid=250 uptime
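
If the allocation spans many nodes, reading each uptime line by eye becomes tedious. The sketch below filters the output and prints only the lines whose 1-minute load average is under a threshold. It assumes the usual Linux uptime format ending in "load average: 1m, 5m, 15m"; the function name report_low_load and the threshold value are illustrative only.

```shell
# Hypothetical filter: read uptime output on stdin and report lines whose
# 1-minute load average is below the threshold given as the first argument.
# Assumes each line contains "load average: <1m>, <5m>, <15m>".
report_low_load() {
    awk -v min="$1" '
        match($0, /load average: [0-9.]+/) {
            # Skip the 14 characters of "load average: " to get the 1-minute value.
            load = substr($0, RSTART + 14, RLENGTH - 14)
            if (load + 0 < min + 0)
                print "LOW (" load "): " $0
        }
    '
}
```

It could be used as srun --jobid=250 uptime | report_low_load 1.0; no output would then suggest that every node reports a 1-minute load of at least 1.0.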

If you are concerned about holding the resources too long or leaving them allocated long after you have finished using them, you can submit a simple sleep job to limit the allocation time, as follows:

$ bsub -n4 -ext "SLURM[nodes=4]" -o %J.out sleep 300

Job <125> is submitted to the default queue

After you confirm that this job is running using the bjobs -l 125 command, you can work interactively with the resources. If LSF-HPC observes no SLURM activity within the allocation after 5 minutes, it terminates the job. Any existing SLURM activity (including running MPI jobs with the mpirun -srun command) is allowed to continue.
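
The 5-minute limit in this example comes from the sleep 300 argument (5 x 60 seconds). As a small sketch of that arithmetic, the hypothetical helper below builds the same bsub command line from a process count, a node count, and a time limit in minutes. It only prints the command for review and does not submit anything.

```shell
# Hypothetical helper: print a bsub command line for a sleep job that holds
# an allocation for a given number of minutes.
#   $1 = number of processes, $2 = number of nodes, $3 = minutes
hold_allocation_cmd() {
    # %%J prints a literal %J, which bsub replaces with the job ID.
    printf 'bsub -n%s -ext "SLURM[nodes=%s]" -o %%J.out sleep %s\n' \
        "$1" "$2" "$(( $3 * 60 ))"
}
```

Calling hold_allocation_cmd 4 4 5 prints the command shown in the example above.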

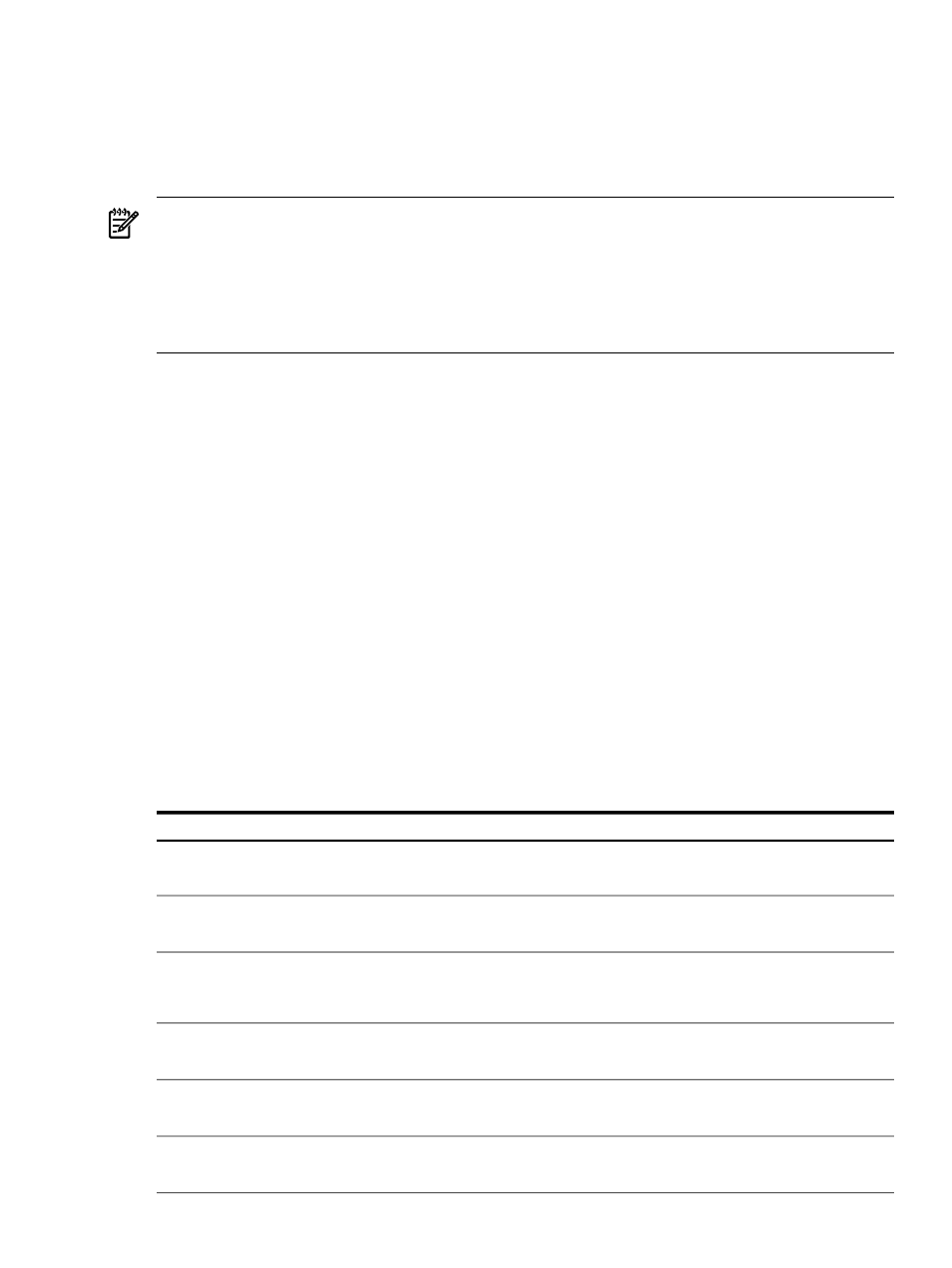

10.14 LSF-HPC Equivalents of SLURM srun Options

Table 10-2 describes the srun options and lists their LSF-HPC equivalents.

Table 10-2 LSF-HPC Equivalents of SLURM srun Options

| srun Option | Description | LSF-HPC Equivalent |
| --- | --- | --- |
| -n, --ntasks=ntasks | Number of processes (tasks) to run. | bsub -n num |
| -c, --processors-per-task=ntasks | Specifies the number of cores per task. Min processors per node = MAX(ncpus, mincpus) | HP XC does not provide this option because the meaning of this option can be covered by bsub -n and mincpus=n. |
| -N, --nodes=min[-max] | Specifies the minimum and, optionally, maximum number of nodes allocated to job. The job allocation will contain at least the minimum number of nodes. | -ext "SLURM[nodes=minmax]" where minmax is min[-max] |
| --mincpus=n | Specifies the minimum number of cores per node. Min processors per node = MAX(-c ncpus, --mincpus=n). Default value is 1. | -ext "SLURM[mincpus=n]" |
| --mem=MB | Specifies a minimum amount of real memory of each node. By default, job does not require -ext. | -ext "SLURM[mem=MB]" |
| --tmp=MB | Specifies a minimum amount of temporary disk space of each node. By default, job does not require -ext. | -ext "SLURM[tmp=MB]" |
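
The mapping in this part of the table can also be written as a small lookup. The sketch below is illustrative only and is not an HP XC or LSF-HPC tool; the function name srun_to_lsf is hypothetical. It covers just the options listed above and prints the equivalent as text without running bsub.

```shell
# Hypothetical lookup: print the LSF-HPC equivalent of one srun option.
#   $1 = srun option name, $2 = its value
# Returns non-zero for options that have no direct equivalent in the table.
srun_to_lsf() {
    case "$1" in
        -n|--ntasks)  printf '%s\n' "bsub -n $2" ;;
        -N|--nodes)   printf '%s\n' "-ext \"SLURM[nodes=$2]\"" ;;
        --mincpus)    printf '%s\n' "-ext \"SLURM[mincpus=$2]\"" ;;
        --mem)        printf '%s\n' "-ext \"SLURM[mem=$2]\"" ;;
        --tmp)        printf '%s\n' "-ext \"SLURM[tmp=$2]\"" ;;
        -c|--processors-per-task)
            # Per the table: not provided; covered by bsub -n and mincpus=n.
            echo "no direct equivalent; use bsub -n and mincpus=n" >&2
            return 1 ;;
        *)
            echo "option not covered here: $1" >&2
            return 1 ;;
    esac
}
```

For example, srun_to_lsf --nodes 2-4 prints -ext "SLURM[nodes=2-4]", which can then be added to a bsub command line.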
