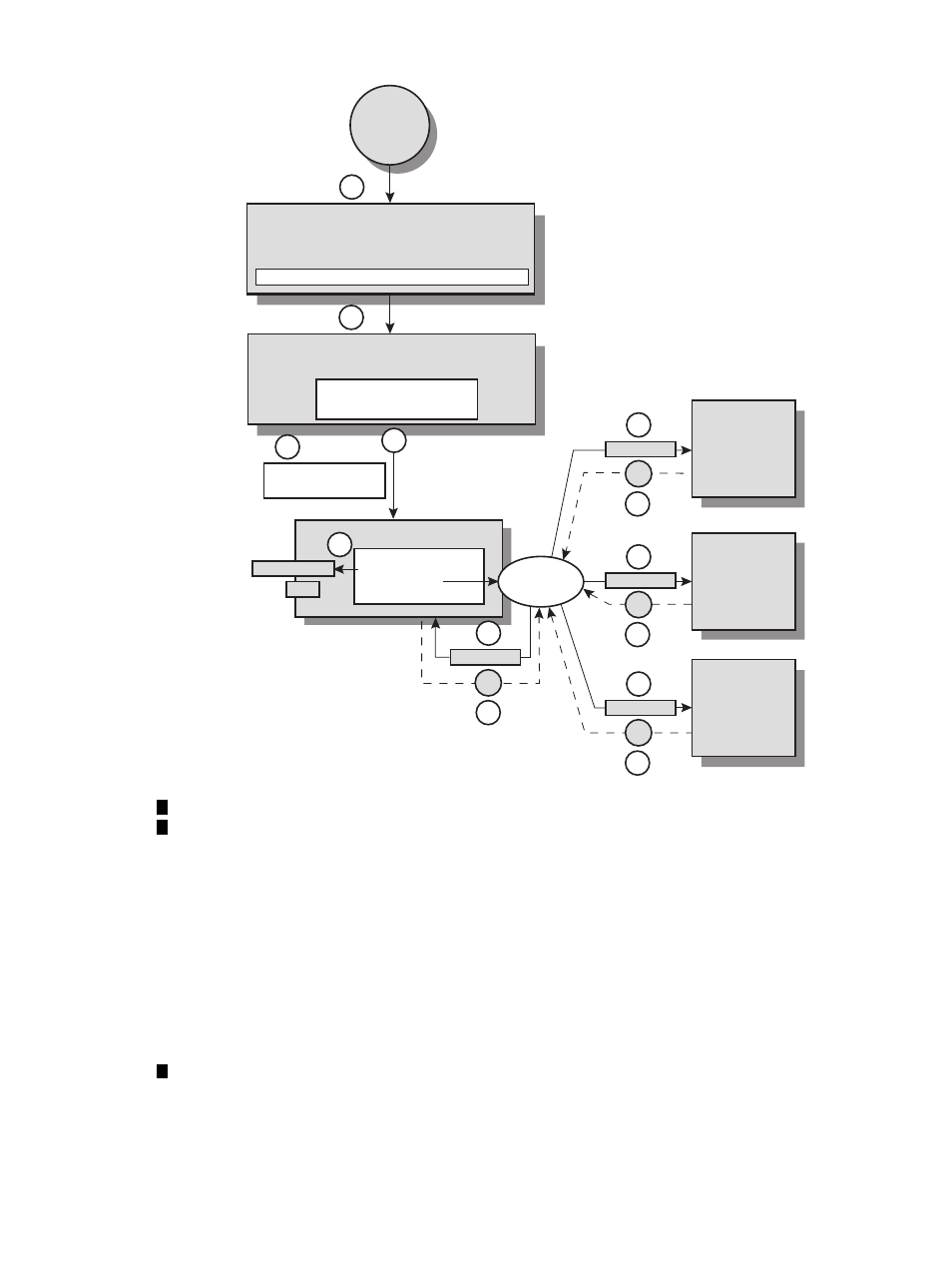

HP XC System 3.x Software User Manual: How LSF-HPC and SLURM Launch and Manage a Job (Figure 10-1)

Page 89

Figure 10-1 How LSF-HPC and SLURM Launch and Manage a Job

[Figure labels: User; Login node n16; LSF Execution Host lsfhost.localdomain; job_starter.sh; SLURM_JOBID=53, SLURM_NPROCS=4; Compute Nodes n1, n2, n3, n4; steps numbered 1 to 7.]

1. A user logs in to login node n16.

2. The user runs the following LSF bsub command on login node n16:

$ bsub -n4 -ext "SLURM[nodes=4]" -o output.out ./myscript

This bsub command submits a request for four cores (the -n4 option) spread across four nodes (the -ext "SLURM[nodes=4]" option); the job is launched on those cores.

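The script myscript, shown here, runs the job:

    #!/bin/sh
    hostname                  # print the name of the node running the script
    srun hostname             # run hostname as a job step in the SLURM allocation
    mpirun -srun ./hellompi   # start the MPI program hellompi, using srun to launch it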

3. LSF-HPC schedules the job and monitors the state of the resources (compute nodes) in the SLURM lsf partition. When the LSF-HPC scheduler determines that the required resources are available, LSF-HPC allocates those resources in SLURM and obtains a SLURM job identifier (jobID) that corresponds to the allocation.

In this example, four cores spread over four nodes (n1, n2, n3, n4) are allocated for myscript, and SLURM job ID 53 is assigned to the allocation.
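The option mapping in step 2 can be wrapped in a small shell function so that the core and node counts become parameters. This is only an illustrative sketch: lsf_slurm_submit, print_args, and the BSUB override variable are hypothetical names, not part of LSF-HPC. The function runs bsub by default; pointing BSUB at print_args turns it into a dry run that prints each argument bsub would receive.

```shell
#!/bin/sh
# Hypothetical helper (not an LSF-HPC command): submit SCRIPT with NCORES cores
# spread over NNODES nodes, sending standard output to OUTFILE, as in step 2.
# It runs bsub unless BSUB names another command (used here for a dry run).
lsf_slurm_submit() {
    ncores=$1 nnodes=$2 outfile=$3 script=$4
    # -n<ncores>               number of cores requested from LSF-HPC
    # -ext "SLURM[nodes=<n>]"  spread those cores across <n> nodes
    # The double quotes stop the shell from treating [...] as a glob pattern.
    ${BSUB:-bsub} -n"$ncores" -ext "SLURM[nodes=$nnodes]" -o "$outfile" "$script"
}

# Dry run: print each argument bsub would receive, one per line.
print_args() { printf '%s\n' "$@"; }
BSUB=print_args
lsf_slurm_submit 4 4 output.out ./myscript
```

The dry run prints -n4, -ext, SLURM[nodes=4], -o, output.out, and ./myscript on separate lines, which shows that the quoted -ext value reaches bsub as a single word.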
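The scheduling decision in step 3 can be pictured with a toy model. The sketch below is not how LSF-HPC schedules jobs; it only illustrates the idea of checking whether enough nodes are free and, if so, choosing them. The function name pick_idle_nodes, the name:state input format, and the node states used in the example are all hypothetical.

```shell
#!/bin/sh
# Toy model (not LSF-HPC's scheduler): given node states as name:state pairs,
# pick NEEDED idle nodes. Prints them comma-separated and returns 0, or prints
# nothing and returns 1 when too few nodes are idle (the point at which a real
# scheduler would leave the job pending).
pick_idle_nodes() {
    needed=$1
    shift
    picked=""
    count=0
    for entry in "$@"; do
        [ "$count" -ge "$needed" ] && break
        node=${entry%%:*}    # text before the first colon
        state=${entry#*:}    # text after the first colon
        if [ "$state" = "idle" ]; then
            picked="${picked:+$picked,}$node"
            count=$((count + 1))
        fi
    done
    [ "$count" -ge "$needed" ] || return 1
    printf '%s\n' "$picked"
}

# Hypothetical partition state: n5 is busy, so n1, n2, n3, and n4 are chosen.
pick_idle_nodes 4 n1:idle n2:idle n5:allocated n3:idle n4:idle
```

A real scheduler weighs far more than node state; the toy simply takes the first idle nodes in the order given.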
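Once the allocation exists, a job script can report which allocation it is running in. Figure 10-1 shows SLURM_JOBID=53 and SLURM_NPROCS=4 in the job's environment; the sketch below assumes those two variables are set and falls back to "unset" when they are not. The function name report_allocation is hypothetical.

```shell
#!/bin/sh
# Hypothetical diagnostic a job script such as myscript could run first.
# Assumes the job environment carries SLURM_JOBID (the allocation's job ID)
# and SLURM_NPROCS (number of processes); each falls back to "unset".
report_allocation() {
    printf 'SLURM job %s, %s processes\n' \
        "${SLURM_JOBID:-unset}" "${SLURM_NPROCS:-unset}"
}

report_allocation
```

Run inside the allocation from step 3, it would print SLURM job 53, 4 processes.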

10.8 How LSF-HPC and SLURM Launch and Manage a Job
