A.6 random reads, Random read rate – HP StorageWorks Scalable File Share User Manual


Another way to measure throughput is to average only over the time during which all the clients are active. This is represented by the taller, narrower box in the figure. Throughput calculated this way shows what the system is capable of, because the stragglers are ignored.

This alternate calculation method is sometimes called "stonewalling". It can be accomplished in several ways. One is to stop the test run as soon as the fastest client finishes (IOzone does this by default). Another is to run each process for a fixed amount of time rather than for a fixed volume of data (IOR has an option to do this). Finally, if detailed performance data is captured for each client with good time resolution, stonewalling can be applied numerically by calculating the average only up to the time the first client finishes.
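The numerical form of stonewalling can be illustrated with a short Python sketch. It assumes each client's progress was logged as (elapsed seconds, cumulative bytes) samples, and that all clients start together at time 0 with 0 bytes moved. `ClientTrace`, `full_run_throughput`, and `stonewalled_throughput` are hypothetical names and are not part of IOzone, IOR, or HP SFS. The full-run figure divides all data moved by the time the slowest client finishes. The stonewalled figure counts only the data moved up to the moment the first client finishes.

```python
from dataclasses import dataclass


@dataclass
class ClientTrace:
    """Hypothetical per-client log: (elapsed_seconds, cumulative_bytes), sorted by time.

    Assumes the client started at time 0 with 0 bytes transferred.
    """
    samples: list[tuple[float, float]]

    def finish_time(self) -> float:
        return self.samples[-1][0]

    def bytes_at(self, t: float) -> float:
        """Cumulative bytes at time t, linearly interpolated between samples."""
        prev_t, prev_b = 0.0, 0.0
        for cur_t, cur_b in self.samples:
            if t <= cur_t:
                if cur_t == prev_t:
                    return cur_b
                frac = (t - prev_t) / (cur_t - prev_t)
                return prev_b + frac * (cur_b - prev_b)
            prev_t, prev_b = cur_t, cur_b
        return prev_b  # t is past the last sample: the client has finished


def full_run_throughput(clients: list[ClientTrace]) -> float:
    """All bytes moved, divided by the time the last (slowest) client finishes."""
    total = sum(c.samples[-1][1] for c in clients)
    end = max(c.finish_time() for c in clients)
    return total / end


def stonewalled_throughput(clients: list[ClientTrace]) -> float:
    """Bytes moved by every client up to the first client's finish, over that time."""
    cutoff = min(c.finish_time() for c in clients)
    moved = sum(c.bytes_at(cutoff) for c in clients)
    return moved / cutoff
```

Consider two hypothetical clients that each move 100 bytes. One finishes at 10 s and the other at 20 s: `ClientTrace([(5.0, 50.0), (10.0, 100.0)])` and `ClientTrace([(10.0, 50.0), (20.0, 100.0)])`. For these clients, `full_run_throughput` gives 10 bytes/s and `stonewalled_throughput` gives 15 bytes/s, because the slow client's tail after 10 s is excluded. Linear interpolation between samples is a simplifying choice, and it matters less as the sampling resolution improves.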

NOTE:

The results shown in this report do not rely on stonewalling. We performed the numerical calculation on a sample of test runs and found that stonewalling increased the reported throughput by roughly 10% in many cases.

Neither calculation is better than the other; each shows something different about the system. However, when comparing results from different studies, it is important to know whether stonewalling was used and how much it affects the results. IOzone uses stonewalling by default but has an option to turn it off. IOR does not use stonewalling by default but has an option to turn it on.

A.6 Random Reads

HP SFS with Lustre is optimized for large sequential transfers, with aggressive read-ahead and write-behind buffering in the clients. Nevertheless, certain applications rely on small random reads, so understanding performance with small random I/O is important.

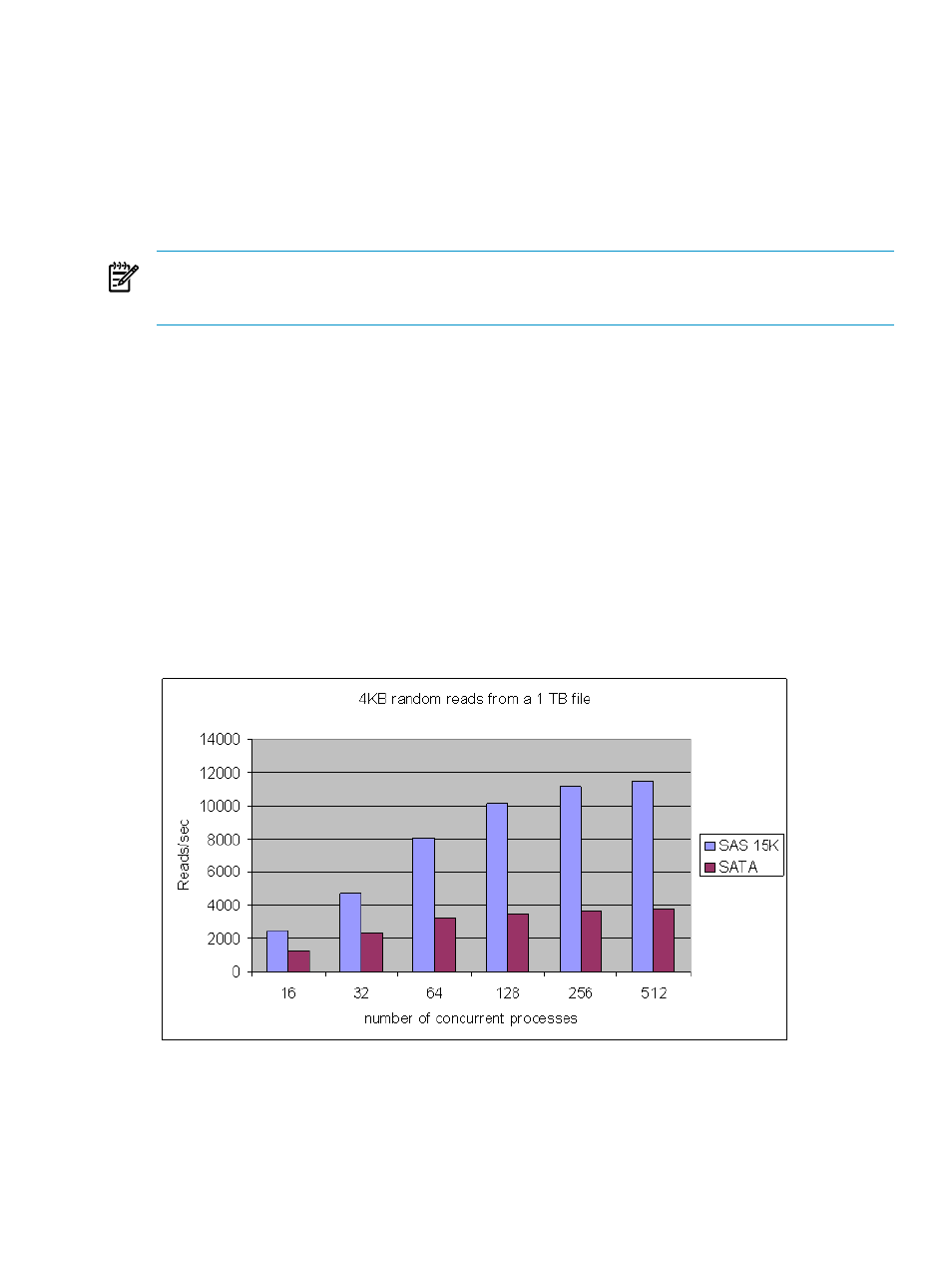

compares random read performance of SFS G3.0-0 using 15Krpm SAS drives and

7.2Krpm SATA drives. Each client node ran from 1 to 32 processes (from 16 to 512 concurrent

processes in all). All the processes performed page-aligned 4KB random reads from a single 1

TB file striped over all 16 OSTs.

Figure A-9 Random Read Rate
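The Python sketch below shows the access pattern for a single process. It assumes 4096-byte pages and a POSIX system where `os.pread` is available. `random_offsets`, `random_read_rate`, and `total_processes` are hypothetical helpers and are not the harness used for Figure A-9. The real test ran many processes per client node against a Lustre file striped over 16 OSTs, and this sketch models neither of those.

```python
import os
import random
import time

# Assumptions for illustration: 4096-byte pages and 4 KB requests, matching the
# page-aligned 4 KB random reads described above. All names are hypothetical.
PAGE_SIZE = 4096
READ_SIZE = 4096


def random_offsets(file_size: int, count: int, rng: random.Random) -> list[int]:
    """Pick `count` page-aligned offsets where a full READ_SIZE read fits in the file."""
    if file_size < READ_SIZE:
        raise ValueError("file is smaller than one read")
    last_page = (file_size - READ_SIZE) // PAGE_SIZE
    return [rng.randint(0, last_page) * PAGE_SIZE for _ in range(count)]


def random_read_rate(path: str, count: int, seed: int = 0) -> float:
    """Issue `count` random 4 KB reads with os.pread and return reads per second."""
    rng = random.Random(seed)
    fd = os.open(path, os.O_RDONLY)
    try:
        offsets = random_offsets(os.fstat(fd).st_size, count, rng)
        start = time.perf_counter()
        for offset in offsets:
            data = os.pread(fd, READ_SIZE, offset)
            if len(data) != READ_SIZE:
                raise IOError(f"short read at offset {offset}")
        elapsed = time.perf_counter() - start
    finally:
        os.close(fd)
    return count / elapsed


def total_processes(client_nodes: int, per_node: int) -> int:
    """Concurrent processes across the cluster, e.g. 16 nodes x 32 per node = 512."""
    return client_nodes * per_node
```

For a 1 TB file (`file_size = 2**40`), `random_offsets` chooses among 2**28 (about 268 million) page-aligned positions. On a local file, the operating system's page cache may serve repeated reads, so the measured rate can overstate what the disks themselves deliver.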

For 16 concurrent reads, one per client node, the number of reads per second with 15K rpm SAS drives is roughly twice that with SATA drives. This difference reflects the difference in mechanical access time between the two types of disk. At higher levels of concurrency the difference is even greater, because SAS drives can accept multiple overlapped requests and perform an optimized elevator sort on the queue of requests.
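A simplified one-dimensional model illustrates the benefit of reordering a request queue. In this model, each request is a track number and the cost of servicing the queue is total head travel. The Python sketch below uses the LOOK variant of the elevator algorithm, which sweeps upward from the current head position and then reverses at the last pending request. It compares that order with first-come, first-served order. The track numbers and function names are hypothetical, and real drive firmware may also account for rotational position. The sketch only shows why a deeper queue gives a drive more room to shorten its seeks.

```python
def fifo_order(queue: list[int]) -> list[int]:
    """Service requests in arrival order (no reordering)."""
    return list(queue)


def elevator_order(head: int, queue: list[int]) -> list[int]:
    """LOOK-style elevator: ascend from the head, then reverse for the rest."""
    up = sorted(r for r in queue if r >= head)
    down = sorted((r for r in queue if r < head), reverse=True)
    return up + down


def seek_distance(head: int, order: list[int]) -> int:
    """Total head travel, in tracks, to service requests in the given order."""
    total, pos = 0, head
    for track in order:
        total += abs(track - pos)
        pos = track
    return total


if __name__ == "__main__":
    head, queue = 50, [90, 10, 60, 20, 80]  # hypothetical track numbers
    print(seek_distance(head, fifo_order(queue)))            # 270
    print(seek_distance(head, elevator_order(head, queue)))  # 120
```

With a single outstanding request, both policies behave identically. Reordering only pays off once several requests are queued, which is consistent with the SAS advantage growing at higher concurrency.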
