
Notes:

1.

Unshielded Twisted Pair (UTP) card; uses copper wire cabling

2.

Uses fiber optics

3.

Custom Card Identification Number and System i Feature Code

4.

Virtual Ethernet enables you to establish communication via TCP/IP between logical partitions and can be used without

any additional hardware or software.

5.

Depends on the hardware of the system.

6.

These are theoretical hardware unidirectional speeds

7.

Each port can handle 1000 Mbps

8.

Blade communicates with the VIOS Partition via Virtual Ethernet

9.

Host Ethernet Adapter for IBM Power 550, 9409-M50 running IBM i Operating System

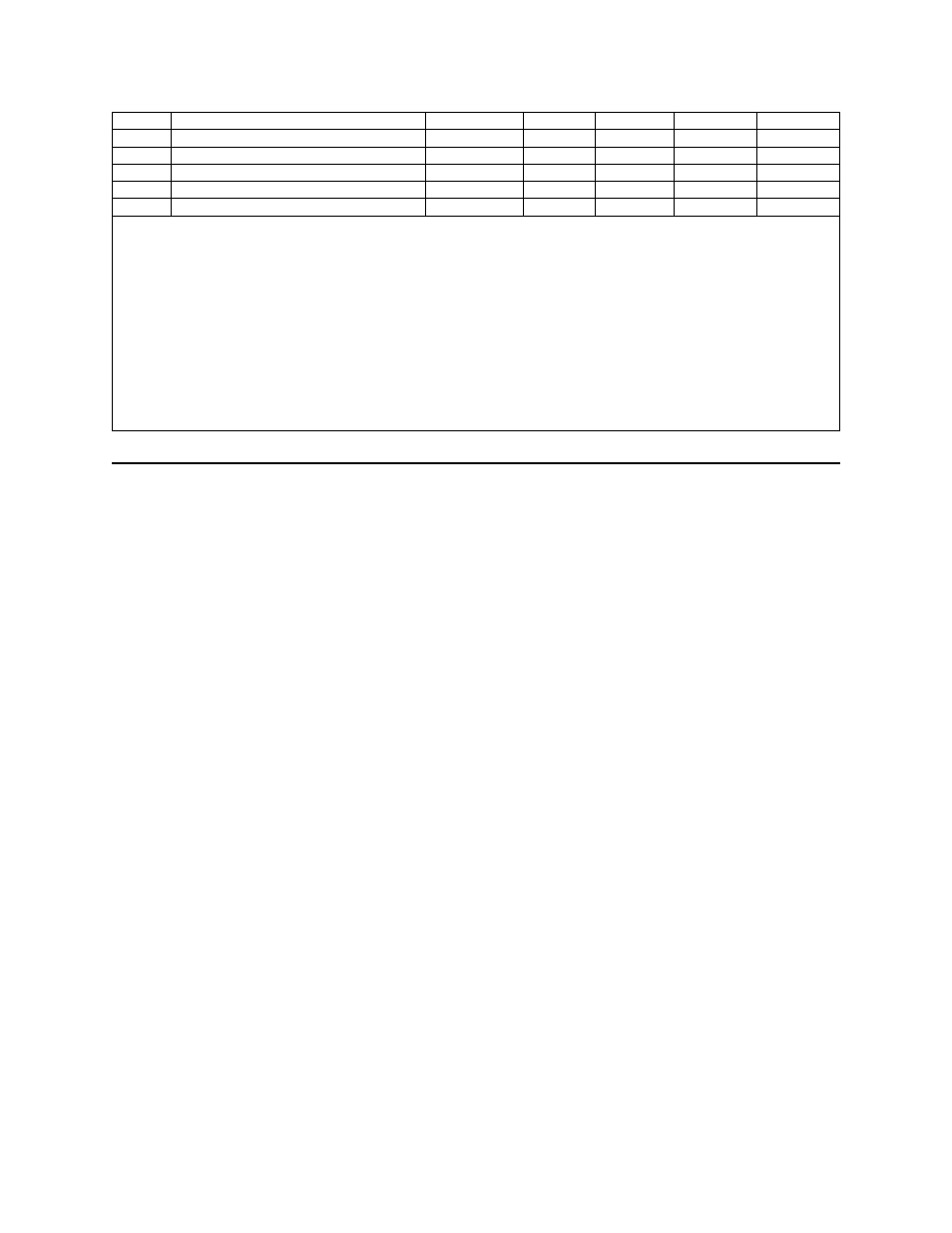

y

All adapters support Auto-negotiation

Yes

Yes

N/A

Yes

n/a

5

Blade

8

N/A

No

Yes

N/A

Yes

n/a

5

Virtual Ethernet

4

N/A

Yes

Yes

Yes

Yes

10 / 100 / 1000

IBM 4-Port 10/100/1000 Base-TX PCI-e

7,9

1819

1

Yes

Yes

Yes

Yes

10 / 100 / 1000

IBM 4-Port 10/100/1000 Base-TX PCI-e

7

181C

1

Yes

Yes

Yes

Yes

10000

IBM 2-Port Gigabit Base-SX PCI-e

181B

2

Yes

Yes

Yes

Yes

10 / 100 / 1000

IBM 2-Port 10/100/1000 Base-TX PCI-e

7

181A

1
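As a rough illustration of notes 6 and 7, the sketch below multiplies port count by per-port line rate for the three Base-TX adapters marked with note 7 and converts the result to megabytes per second. It is a back-of-the-envelope calculation under stated assumptions, not IBM data: it assumes every port runs at 1000 Mbps simultaneously in one direction and ignores protocol, bus, and CPU overhead, so measured throughput will be lower. The dictionary and function names are hypothetical.

```python
"""Back-of-the-envelope aggregate line rate for the note-7 adapters.

Assumption (not from the source): aggregate = ports x per-port speed, all
ports busy in one direction, no protocol, bus, or CPU overhead.
"""

# Hypothetical lookup table: CCIN -> (description, port count, per-port Mbps).
# Port counts come from the descriptions; 1000 Mbps per port comes from note 7.
NOTE7_ADAPTERS = {
    "181A": ("IBM 2-Port 10/100/1000 Base-TX PCI-e", 2, 1000),
    "181C": ("IBM 4-Port 10/100/1000 Base-TX PCI-e", 4, 1000),
    "1819": ("IBM 4-Port 10/100/1000 Base-TX PCI-e", 4, 1000),
}


def aggregate_mbps(ports: int, per_port_mbps: int) -> int:
    """Theoretical unidirectional aggregate if every port runs at line rate."""
    return ports * per_port_mbps


def mbps_to_megabytes_per_s(mbps: float) -> float:
    """Convert megabits/s to megabytes/s (8 bits per byte, decimal prefixes)."""
    return mbps / 8


if __name__ == "__main__":
    for ccin, (desc, ports, speed) in NOTE7_ADAPTERS.items():
        total = aggregate_mbps(ports, speed)
        print(f"{ccin}  {desc:<38} {total:>5} Mbps  (~{mbps_to_megabytes_per_s(total):.0f} MB/s)")
```

For example, a 4-port adapter works out to at most 4000 Mbps (about 500 MB/s) in one direction under these assumptions.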

5.2 Communication Performance Test Environment

Hardware

All PCI-X measurements for 100 Mbps and 1 Gigabit were completed on an IBM System i 570+ 8-Way (2.2 GHz). The system was configured with logical partitions (LPARs), and each communication test was performed between two partitions on the same system, each with one dedicated CPU. The Gigabit IOAs were installed in a 133 MHz PCI-X slot.

The 10 Gigabit measurements were completed on two IBM System i 520+ 2-Way (1.9 GHz) servers. Each server was configured as a single-LPAR system with one dedicated CPU. Each communication test was performed between the two systems, and the 10 Gigabit IOAs were installed in the 266 MHz PCI-X DDR (double data rate) slot for maximum performance. Only the 10 Gigabit Short Reach (573A) IOAs were used in our test environment.
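A quick calculation suggests why the 266 MHz PCI-X DDR slot matters for a 10 Gigabit IOA. The sketch assumes a 64-bit PCI-X bus, treats "133 MHz" and "266 MHz" as 133 and 266 million transfers per second, and ignores bus protocol overhead; these are textbook peak figures, not measurements from this test environment. Under those assumptions a 133 MHz slot peaks near 8.5 Gbit/s, below the 10 Gbit/s line rate, while the 266 MHz DDR slot peaks near 17 Gbit/s.

```python
"""Theoretical peak PCI-X bandwidth versus the 10 Gigabit Ethernet line rate.

Assumptions (not from the source): 64-bit (8-byte) bus, one transfer per
listed "MHz" (266 MHz DDR = 266 million transfers/s), no protocol overhead.
"""

BUS_WIDTH_BYTES = 8          # 64-bit PCI-X data path
TEN_GBE_LINE_RATE_GBPS = 10  # unidirectional Ethernet line rate


def pcix_peak_gbps(mega_transfers_per_s: float, width_bytes: int = BUS_WIDTH_BYTES) -> float:
    """Peak bus bandwidth in gigabits per second."""
    bytes_per_s = mega_transfers_per_s * 1e6 * width_bytes
    return bytes_per_s * 8 / 1e9


if __name__ == "__main__":
    for label, mts in (("133 MHz PCI-X", 133), ("266 MHz PCI-X DDR", 266)):
        peak = pcix_peak_gbps(mts)
        verdict = "covers" if peak >= TEN_GBE_LINE_RATE_GBPS else "falls short of"
        print(f"{label}: ~{peak:.1f} Gbit/s peak, {verdict} 10 Gbit/s")
```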

All PCI-e measurements were completed on an IBM System i 9406-MMA 7061 16-way or an IBM Power 550 (9409-M50). Each system was configured with LPARs, and each communication test was performed between two partitions on the same system, each with one dedicated CPU. The Gigabit IOAs were installed in a PCI-e 8x slot.

All Blade Center measurements were collected on a 4-processor 7998-61X Blade with 32 GB of memory in a Blade Center H chassis. The AIX partition running the VIOS server was not limited. All performance data was collected with the Blade running as the server. The System i partition on the Blade was limited to 1 CPU and 4 GB of memory and communicated with an external IBM System i 570+ 8-Way (2.2 GHz) configured as a single-LPAR system with one dedicated CPU and 4 GB of memory.

Software

The NetPerf and Netop workloads are primitive-level function workloads used to explore communications performance. The workloads consist of programs that run between a System i client and a System i server. Multiple instances of the workloads can be executed over multiple connections to increase the system load. The programs communicate with each other using sockets or SSL APIs.
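The request/response pattern these workloads exercise can be sketched with ordinary TCP sockets. The example below is not NetPerf or Netop; it is a minimal Python stand-in that assumes fixed-size messages, a loopback server instead of a second partition or system, and hypothetical message sizes and transaction counts. It runs several client connections concurrently, mirroring the idea of adding connections to raise the load, and reports transactions per second. The SSL variant is not shown.

```python
"""Minimal TCP request/response workload (a hypothetical stand-in, not NetPerf/Netop).

Assumptions: fixed-size messages, loopback instead of two partitions/systems,
and illustrative sizes and counts.
"""
import socket
import threading
import time

REQUEST_SIZE = 128    # hypothetical request size in bytes
RESPONSE_SIZE = 128   # hypothetical response size in bytes
TRANSACTIONS = 200    # hypothetical transactions per connection


def recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes; TCP may deliver one message in several pieces."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("peer closed the connection")
        buf.extend(chunk)
    return bytes(buf)


def serve_connection(conn: socket.socket) -> None:
    """Server side: answer every fixed-size request with a fixed-size response."""
    response = b"r" * RESPONSE_SIZE
    with conn:
        while True:
            try:
                recv_exact(conn, REQUEST_SIZE)
            except ConnectionError:
                return  # client closed its end: this connection is done
            conn.sendall(response)


def accept_loop(listener: socket.socket, n_conns: int) -> None:
    """Accept n_conns clients and serve each one on its own thread."""
    for _ in range(n_conns):
        conn, _addr = listener.accept()
        threading.Thread(target=serve_connection, args=(conn,), daemon=True).start()


def client(port: int, completed: list, index: int) -> None:
    """Client side: send a request, wait for the full response, repeat."""
    request = b"q" * REQUEST_SIZE
    with socket.create_connection(("127.0.0.1", port)) as sock:
        sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)  # no Nagle delay for small messages
        for _ in range(TRANSACTIONS):
            sock.sendall(request)
            recv_exact(sock, RESPONSE_SIZE)
            completed[index] += 1


def run_workload(n_conns: int) -> tuple[int, float]:
    """Run n_conns concurrent connections; return (transactions, elapsed seconds)."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as listener:
        listener.bind(("127.0.0.1", 0))  # port 0: the OS picks a free port
        listener.listen(n_conns)
        port = listener.getsockname()[1]
        threading.Thread(target=accept_loop, args=(listener, n_conns), daemon=True).start()

        completed = [0] * n_conns
        clients = [threading.Thread(target=client, args=(port, completed, i)) for i in range(n_conns)]
        start = time.perf_counter()
        for t in clients:
            t.start()
        for t in clients:
            t.join()
        elapsed = time.perf_counter() - start
    return sum(completed), elapsed


if __name__ == "__main__":
    for n in (1, 4):
        total, secs = run_workload(n)
        print(f"{n} connection(s): {total} transactions, {total / secs:.0f} trans/s")
```

Comparing the 1-connection and 4-connection lines shows how adding concurrent connections changes aggregate transactions per second; on loopback the figures reflect CPU and TCP stack cost rather than adapter speed.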

IBM i 6.1 Performance Capabilities Reference - January/April/October 2008

© Copyright IBM Corp. 2008

Chapter 5 - Communications Performance