White paper – QLogic 10000 Series Mt. Rainier Technology Accelerates the Enterprise User Manual

SSG-WP12004C

SN0430914-00 rev. C 11/12

cache coherence, enables adoption of server-based SSD caching as a standard configuration. Because the caches are server-based, with proper configuration they can take advantage of the abundant available bandwidth at the edges of storage networks, allowing them to avoid the primary sources of network latency: congestion and resource contention.

Managing Mt. Rainier Clusters

All Mt. Rainier configuration, management, and data collection communication takes place in-band on the existing storage network; no additional connectivity or configuration is required. QLogic provides a Web console, as well as scriptable command line and server virtualization plug-in management tools.

Common management tasks, such as creating a Mt. Rainier cluster, adding or removing cluster members, configuring cache parameters, and configuring SSD data LUNs, are easily accomplished by running the appropriate wizards within the QLogic QConvergeConsole® (QCC) or an environment-specific plug-in. Optionally, the scriptable Host Command Line Interface (HCLI) may also be used to perform any operation.

Standard management tools provide advanced operations to enable cluster maintenance while maintaining continuous availability. For instance, if a server with a cluster member needs to be taken down for maintenance, all of its SSD data LUNs, SAN LUN caching, and mirroring responsibilities can be seamlessly migrated to other cluster members. When the server is back online, the SSD data LUNs, caching, and mirroring responsibilities can optionally be migrated back.

Configuration

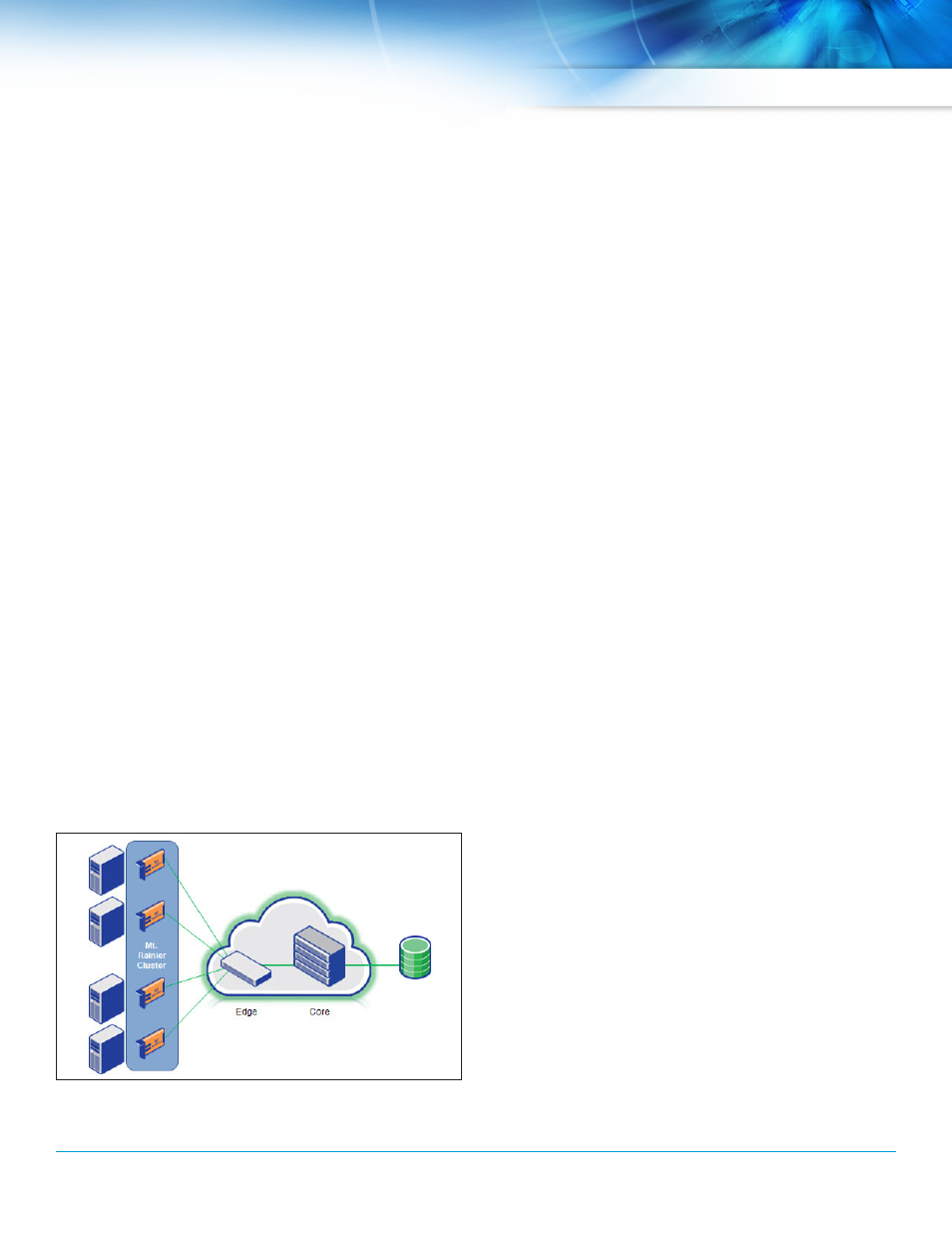

The only topological requirement for establishing a cache adapter cluster is that all members of that cluster must be able to “see” each other over two independent storage network fabrics, as illustrated in Figure 10.

Figure 10. Storage Acceleration Cluster with All Mt. Rainiers Attached to the Same Edge Switch

For Fibre Channel SANs, all cluster members must be zoned together in each fabric to which they are attached. To optimize performance, the recommended best practice is to configure clusters with all members connected to the same pair of top-of-rack or end-of-row switches. This topology is a good match for a rack- or row-oriented storage network layout, providing both direct and indirect system performance benefits. Intra-cluster communication takes advantage of the availability of excess bandwidth at the storage network edge to optimize storage accelerator cluster performance. Much of the high-load storage I/O traffic is removed from the network core, thus benefiting other, non-accelerated storage I/O requirements.
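
The visibility and zoning requirement above can be checked mechanically before a cluster is created. The following is a minimal sketch, not a QLogic tool: it assumes zone membership has already been exported from each fabric into a plain dictionary, and it makes the simplifying assumption that "zoned together" means a single zone contains every cluster member. The function names, fabric names, and member identifiers are all hypothetical.

```python
from typing import Dict, Iterable, List, Set


def fabrics_with_full_visibility(
    cluster_members: Iterable[str],
    zones_by_fabric: Dict[str, List[Set[str]]],
) -> List[str]:
    """Return the fabrics in which one zone contains every cluster member.

    Assumption: "zoned together" is modeled as membership in a single
    common zone. Real zoning databases may need a more detailed check.
    """
    members = set(cluster_members)
    return sorted(
        fabric
        for fabric, zones in zones_by_fabric.items()
        if any(members <= zone for zone in zones)
    )


def meets_topology_requirement(
    cluster_members: Iterable[str],
    zones_by_fabric: Dict[str, List[Set[str]]],
    required_fabrics: int = 2,
) -> bool:
    """True if the members see each other over at least `required_fabrics` fabrics."""
    visible = fabrics_with_full_visibility(cluster_members, zones_by_fabric)
    return len(visible) >= required_fabrics


# Hypothetical example data: three adapters, two fabrics.
example_members = ["adapter_1", "adapter_2", "adapter_3"]
example_zones = {
    "fabric_a": [{"adapter_1", "adapter_2", "adapter_3", "array_port_a"}],
    "fabric_b": [{"adapter_1", "adapter_2"}, {"adapter_3", "array_port_b"}],
}
```

With the example data, only `fabric_a` zones all three adapters together, so the check fails until `adapter_3` is added to the shared zone in `fabric_b`.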

Integration Tools

To support automation and integration with third-party tools, QLogic provides full access to all cache configuration and policy options through the Host CLI, as well as an API.

Host Command Line Interface (HCLI)

Any configuration, management, or data-collection task supported by QConvergeConsole can also be accomplished with the HCLI and may optionally be scripted. For example, if you use write-back caching with network storage that supports LUN snapshots or cloning, and you create point-in-time images (for example, for consistent backups), you must ensure that the write cache is flushed before taking the snapshot or clone. To perform flushing, insert HCLI cache flush commands in the snapshot or cloning pre-processing scripts. Similarly, when mounting a snapshot or clone (for example, in a rollback operation) to Mt. Rainier-enabled servers, HCLI commands may be scripted to first flush the cache (if write-back is enabled), and then invalidate the cache for the affected LUNs before proceeding with the rollback volume mount.
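
The ordering described above (flush before snapshot; flush, then invalidate, then mount for a rollback) can be sketched as a small wrapper. This is an illustrative sketch only: the actual HCLI flush and invalidate command lines are not given in this paper, so they are passed in as opaque argument lists supplied by the caller. The function names and the `run` parameter are hypothetical.

```python
import subprocess
from typing import Callable, Sequence

# A runner executes one command (an argument list) and raises on failure.
Runner = Callable[[Sequence[str]], None]


def run_checked(cmd: Sequence[str]) -> None:
    """Run a command; raise subprocess.CalledProcessError on a non-zero exit."""
    subprocess.run(list(cmd), check=True)


def snapshot_with_flush(
    flush_cmd: Sequence[str],
    snapshot_cmd: Sequence[str],
    run: Runner = run_checked,
) -> None:
    """Flush the write cache, then take the snapshot or clone.

    If the flush raises, the snapshot command is never run, so a
    point-in-time image is not taken over unflushed data.
    """
    run(flush_cmd)      # caller-supplied HCLI cache flush command
    run(snapshot_cmd)   # caller-supplied array snapshot or clone command


def mount_rollback(
    flush_cmd: Sequence[str],
    invalidate_cmd: Sequence[str],
    mount_cmd: Sequence[str],
    write_back_enabled: bool,
    run: Runner = run_checked,
) -> None:
    """Flush (only if write-back is enabled), invalidate, then mount."""
    if write_back_enabled:
        run(flush_cmd)
    run(invalidate_cmd)  # caller-supplied HCLI invalidate command for the affected LUNs
    run(mount_cmd)       # caller-supplied rollback volume mount command
```

Passing the runner as a parameter lets the sequence be dry-run against a recording function (for example, `run=calls.append`) before it is wired into real pre-processing scripts.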

The HCLI also provides advanced SSD data LUN and SAN LUN caching commands that automate provisioning, decommissioning, and policy changes. For instance, based on knowledge of application processing cycles, you may need to modify write caching parameters. A LUN involved in decision support nominally has low and “bursty” write activity, and would benefit from a write-back caching policy. However, when such LUNs are refreshed (for example, during periodic Extract-Transform-Load [ETL] activities), the profile changes to heavy write, and therefore write-back should be disabled by inserting the HCLI cache policy commands into the ETL control scripting.
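
One way to structure such ETL control scripting is to wrap the load in a block that switches the policy on entry and switches it back on exit. The sketch below is illustrative only: the HCLI cache policy command lines are not given in this paper and are passed in as caller-supplied argument lists, and restoring write-back after the load is an assumption based on the nominal policy described above. The name `write_back_disabled` is hypothetical.

```python
import subprocess
from contextlib import contextmanager
from typing import Callable, Iterator, Sequence

# A runner executes one command (an argument list) and raises on failure.
Runner = Callable[[Sequence[str]], None]


def run_checked(cmd: Sequence[str]) -> None:
    """Run a command; raise subprocess.CalledProcessError on a non-zero exit."""
    subprocess.run(list(cmd), check=True)


@contextmanager
def write_back_disabled(
    disable_cmd: Sequence[str],
    enable_cmd: Sequence[str],
    run: Runner = run_checked,
) -> Iterator[None]:
    """Disable write-back for the duration of a heavy-write job.

    The enable command runs in a `finally` clause, so the policy is
    restored even if the job inside the block raises. (Assumption:
    write-back should be re-enabled once the load completes.)
    """
    run(disable_cmd)  # caller-supplied HCLI cache policy command
    try:
        yield
    finally:
        run(enable_cmd)  # caller-supplied HCLI cache policy command
```

In an ETL control script, the load step would run inside `with write_back_disabled(disable_cmd, enable_cmd): ...`, which keeps the policy change and its reversal next to the job they protect.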

Mt. Rainier API

Third-party developers and end-users who write custom applications can have direct access to all SSD data LUN and SAN LUN caching configuration, management, and data collection facilities of Mt. Rainier clusters by means of the QLogic Mt. Rainier API. In addition to the capabilities described for