White Paper – QLogic 10000 Series Mt. Rainier Technology Accelerates the Enterprise


SSG-WP12004C

SN0430914-00 rev. C 11/12



Introduction

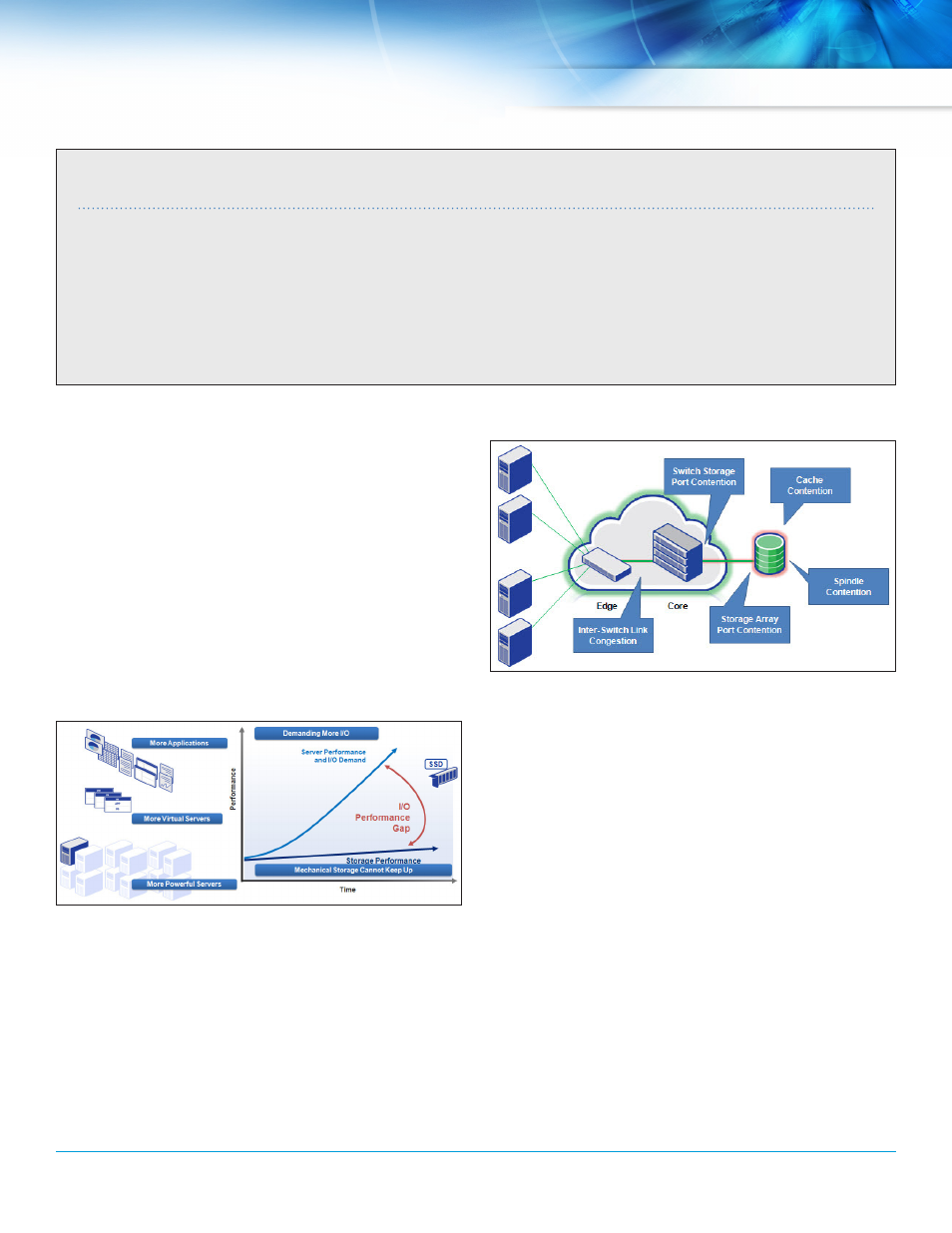

Increased server performance, higher virtual machine density, advances in network bandwidth, and more demanding business application workloads create a critical I/O performance imbalance between servers, networks, and storage subsystems. Storage I/O is the primary performance bottleneck for most virtualized and data-intensive applications. While processor and memory performance have grown in step with Moore’s Law (getting faster and smaller), storage performance has lagged far behind, as shown in Figure 1. This performance gap is further widened by the rapid growth in data volumes that most organizations are experiencing today. For example, IDC predicts that the amount of data in the digital universe will increase by a factor of 44 over the next 10 years.
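To put the IDC projection in annual terms, the calculation below assumes steady compound growth at a constant rate r. This is an illustrative assumption; IDC's figure is a 10-year total, not a stated annual rate. Under that assumption, a 44-fold increase works out to roughly 46% growth per year:

```latex
(1 + r)^{10} = 44
\quad\Longrightarrow\quad
r = 44^{1/10} - 1 \approx 1.46 - 1 = 0.46
```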

Figure 1. Growing Disparity Between CPU and Disk-Based Storage Performance

Following industry best practices, storage has been consolidated, centralized, and placed on storage networks (for example, Fibre Channel, Fibre Channel over Ethernet [FCoE], and iSCSI) to improve efficiency, policy compliance, and data protection. However, networked storage design introduces many new points at which latency can arise. Latency increases response times, slows application access to information, and decreases overall performance. Simply put, any over-subscribed port in the network can become a point of congestion, as shown in Figure 2.
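The sketch below illustrates what "over-subscribed" means. It assumes the common definition of oversubscription: the aggregate bandwidth attached to a shared port divided by that port's capacity. The functions `oversubscription_ratio` and `is_congested` are hypothetical helpers. The port counts, link speeds, and utilization levels are also hypothetical and do not come from this paper.

```python
"""Hypothetical fan-in example: many server ports share a few storage array ports."""


def oversubscription_ratio(ingress_gbps: list[float], egress_gbps: float) -> float:
    """Aggregate bandwidth that could be offered to a port, divided by that port's capacity."""
    return sum(ingress_gbps) / egress_gbps


def is_congested(ingress_gbps: list[float], utilization: float, egress_gbps: float) -> bool:
    """True when the load actually offered (link rate x average utilization)
    exceeds the shared port's capacity, so traffic must queue at that port."""
    offered = sum(ingress_gbps) * utilization
    return offered > egress_gbps


if __name__ == "__main__":
    # Assumed topology: 16 servers at 8 Gb/s each converge on 2 array ports at 8 Gb/s each.
    servers = [8.0] * 16
    array_capacity = 2 * 8.0

    print(f"oversubscription {oversubscription_ratio(servers, array_capacity):.1f}:1")
    for util in (0.10, 0.25):
        print(f"utilization {util:.0%}: congested={is_congested(servers, util, array_capacity)}")
```

In this assumed topology, the ratio is 8:1. The array ports therefore saturate once the servers average more than one eighth (12.5%) of their link rate. Any load beyond that point queues at those ports, which adds latency.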

Figure 2. Sources of Latency on Storage Networks

As application workloads and virtual machine densities increase, so do the pressure on these potential hotspots and the time required to access critical application information. Slower storage response times result in lower application performance, lost productivity, more frequent service disruptions, reduced customer satisfaction, and, ultimately, a loss of competitive advantage.

Over the past decade, IT organizations and suppliers have employed several approaches to relieve congested storage networks and avoid the risks and costly consequences of reduced access to information and underperforming applications.

The Traditional Approach: Refresh Storage Infrastructure

The traditional approach to meeting increased demands on enterprise storage infrastructure is to periodically replace, or “refresh,” the storage arrays with newer products. These infrastructure upgrades tend to focus on higher-performance storage array controllers and disk drives that spread data across a larger number of storage channels, increase the number of front- and back-end array ports available, and increase network bandwidth. Implementing a well-designed infrastructure refresh delivers

Executive Summary

With Mt. Rainier technology, QLogic delivers a set of unique solutions optimized to address the growing performance gap between what the processor can compute and what the storage I/O subsystem can deliver. With a very simple deployment model, this approach seamlessly combines enterprise server I/O connectivity with shared, server-based I/O caching. Mt. Rainier delivers dramatic and, perhaps most importantly, smoothly scalable application performance improvements to the widest range of enterprise applications. In combination with the QLogic Cache Optimization Protocol™ (QCOP), these performance benefits are transparently extended to today’s most demanding active-active clustered environments. This white paper provides a high-level introduction to the QLogic Mt. Rainier technology.