

VMware white paper

3.3. Netperf Throughput

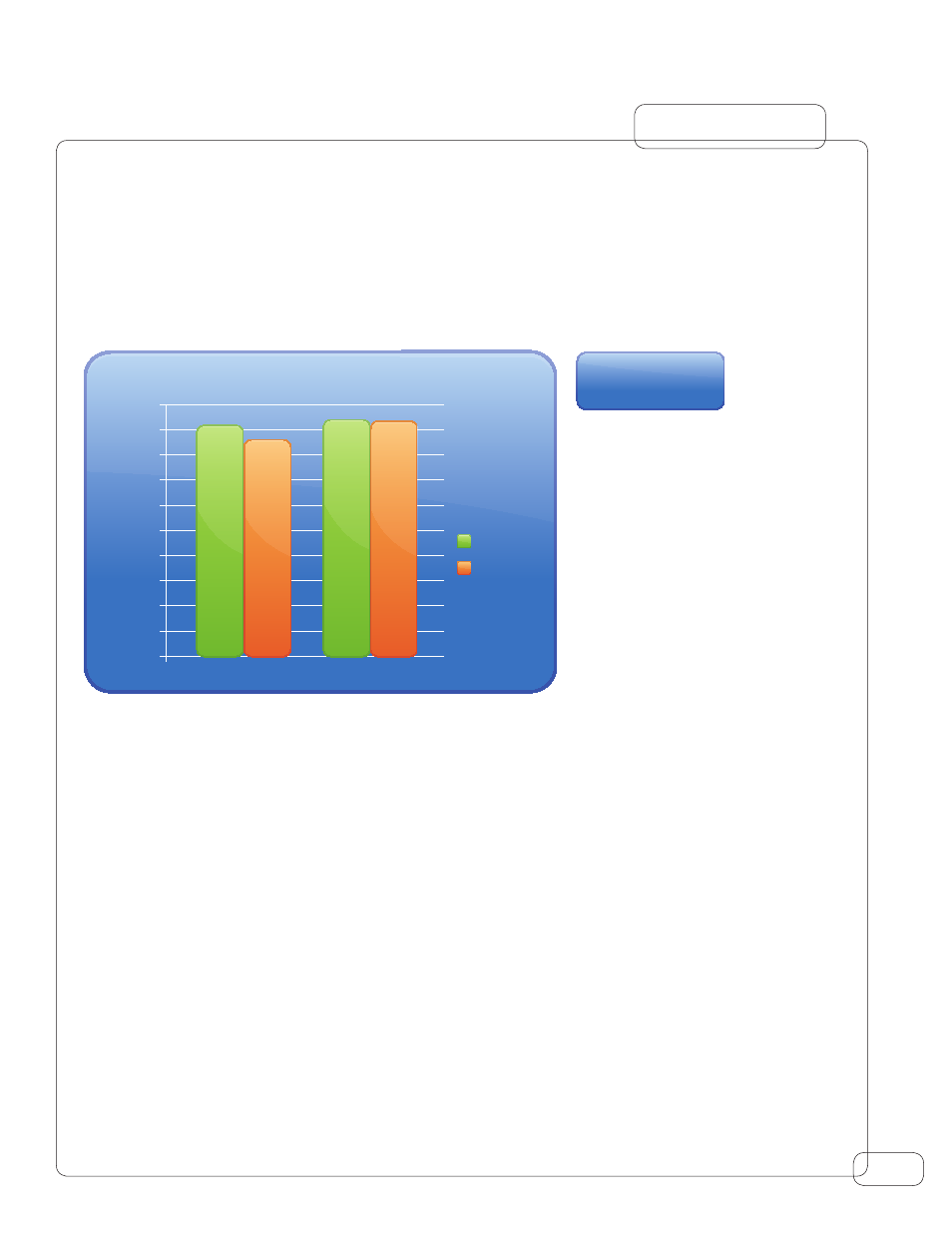

Netperf is a micro-benchmark that measures the throughput of sending and receiving network packets. In this experiment, netperf was configured so that packets could be sent continuously without having to wait for acknowledgements. Since all of the receive traffic needs to be recorded and then transmitted to the secondary, netperf Rx represents a workload with significant FT logging traffic. As shown in Figure 6, with FT enabled, the virtual machine received 890 Mbits/sec of traffic while generating and sending 950 Mbits/sec of logging traffic to the secondary. Transmit traffic, on the other hand, produced relatively little FT logging traffic, mostly from acknowledgement responses and transmit completion interrupt events.
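The ratio between logging traffic and received traffic can be checked with a little arithmetic. The Python below is a back-of-the-envelope sketch that uses only the numbers quoted in this section and in Figure 6; the function name `logging_ratio` is hypothetical and not part of netperf or vSphere.

```python
# Figures quoted in this section and in Figure 6 (netperf with FT enabled).
RX_THROUGHPUT_MBPS = 890.0  # traffic received by the FT-enabled virtual machine
FT_LOGGING_RX_MBPS = 950.0  # FT logging traffic sent to the secondary in the Rx test
FT_LOGGING_TX_MBPS = 54.0   # FT logging traffic in the Tx test (from Figure 6)


def logging_ratio(logging_mbps: float, payload_mbps: float) -> float:
    """Return Mbits of FT logging traffic generated per Mbit of payload traffic."""
    if payload_mbps <= 0:
        raise ValueError("payload throughput must be positive")
    return logging_mbps / payload_mbps


rx_ratio = logging_ratio(FT_LOGGING_RX_MBPS, RX_THROUGHPUT_MBPS)
print(f"Rx: {rx_ratio:.2f} Mbit of logging per Mbit received")
print(f"Rx logging traffic is {FT_LOGGING_RX_MBPS / FT_LOGGING_TX_MBPS:.1f}x the Tx logging traffic")
```

With these inputs the Rx test produces roughly 1.07 Mbit of logging traffic per Mbit received, and about 17.6 times the logging traffic of the Tx test, which matches the qualitative contrast drawn above.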

Figure 6. Netperf Throughput (Mbits/sec for Receives and Transmits, FT Disabled vs. FT Enabled; FT logging traffic: Rx 950 Mbits/sec, Tx 54 Mbits/sec)

3.4. Netperf Latency Bound Case

In this experiment, netperf was configured to use the same message and socket size so that only one message could be outstanding at a time. Under this setup, the sender's TCP/IP stack has to wait for an acknowledgement from the receiver before sending the next message; consequently, any increase in latency results in a corresponding drop in network throughput.
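A simple stop-and-wait model shows why this setup makes throughput a direct function of latency. In the Python sketch below, the message size, round-trip times, window size, and link speed are hypothetical values chosen for illustration, not measurements from this paper. The model treats the round-trip time as the entire per-message cycle (serialization, propagation, and acknowledgement), so throughput is simply message size divided by that time. For contrast, it also models a sender that keeps a whole window of data in flight, which is how most applications behave.

```python
def stop_and_wait_throughput_mbps(message_bytes: int, rtt_us: float) -> float:
    """Throughput when only one message may be outstanding at a time.

    Each message must be acknowledged before the next one is sent, so at most
    one message is delivered per round trip: throughput = size / RTT.
    """
    if rtt_us <= 0:
        raise ValueError("round-trip time must be positive")
    return message_bytes * 8 / rtt_us  # bits per microsecond == Mbits/sec


def windowed_throughput_mbps(window_bytes: int, rtt_us: float, link_mbps: float) -> float:
    """Throughput when a full window of data can be in flight (simplified model)."""
    if rtt_us <= 0:
        raise ValueError("round-trip time must be positive")
    return min(link_mbps, window_bytes * 8 / rtt_us)


# Hypothetical inputs for illustration only.
MESSAGE_BYTES = 8 * 1024
WINDOW_BYTES = 256 * 1024
LINK_MBPS = 1000.0
BASE_RTT_US = 100.0
ADDED_LATENCY_US = 50.0

for rtt in (BASE_RTT_US, BASE_RTT_US + ADDED_LATENCY_US):
    saw = stop_and_wait_throughput_mbps(MESSAGE_BYTES, rtt)
    win = windowed_throughput_mbps(WINDOW_BYTES, rtt, LINK_MBPS)
    print(f"RTT {rtt:.0f} us: stop-and-wait {saw:.1f} Mbits/sec, windowed {win:.1f} Mbits/sec")
```

In this model, adding 50 microseconds to a 100-microsecond round trip cuts stop-and-wait throughput to two thirds, while the windowed sender stays at the link limit in both cases.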

Note that in reality almost all applications send multiple messages without waiting for acknowledgements, so application throughput is not impacted by an increase in network latency. However, since this experiment was purposely designed to test the worst-case scenario, throughput was made dependent on network latency; there are no known real-world applications that would exhibit this behavior. As discussed in , when FT is enabled, the primary virtual machine delays a network transmit until the secondary acknowledges that it has received all the events preceding the transmission of that packet. As expected, FT-enabled virtual machines had higher latencies, which caused a corresponding drop in throughput.
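The transmit rule described above (hold each outgoing packet until the secondary has acknowledged every logged event that preceded it) can be illustrated with a small model. The Python below is a simplified sketch, not VMware's implementation: the class `OutputGate`, its methods, and the idea of tagging each packet with a log sequence number are hypothetical devices used only to show why every transmit can wait on a primary-to-secondary round trip.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class PendingPacket:
    payload: bytes
    log_seq: int  # last log entry recorded before this packet was transmitted


class OutputGate:
    """Simplified model: release packets only after the secondary acknowledges
    all log entries that preceded them."""

    def __init__(self) -> None:
        self.next_log_seq = 0        # sequence number for the next logged event
        self.acked_seq = -1          # highest entry acknowledged by the secondary
        self.pending = deque()       # PendingPacket objects awaiting release, in order
        self.sent = []               # payloads actually released to the network

    def log_event(self) -> int:
        """Record an event that the secondary must receive; return its sequence number."""
        seq = self.next_log_seq
        self.next_log_seq += 1
        return seq

    def transmit(self, payload: bytes) -> None:
        # The packet depends on every event logged so far.
        self.pending.append(PendingPacket(payload, self.next_log_seq - 1))
        self._release()

    def on_secondary_ack(self, seq: int) -> None:
        self.acked_seq = max(self.acked_seq, seq)
        self._release()

    def _release(self) -> None:
        # Release in order; stop at the first packet whose preceding events are unacknowledged.
        while self.pending and self.pending[0].log_seq <= self.acked_seq:
            self.sent.append(self.pending.popleft().payload)


gate = OutputGate()
event = gate.log_event()   # e.g. a received packet recorded in the log
gate.transmit(b"reply")
print(gate.sent)           # prints [] because the reply is held
gate.on_secondary_ack(event)
print(gate.sent)           # prints [b'reply'] once the secondary has acknowledged
```

In a stop-and-wait workload like the one in this experiment, every message passes through this gate, so the added wait shows up directly as lower throughput; a sender with many messages in flight overlaps these waits.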