DRS and VMotion, Timer Interrupts, Fault Tolerance Logging Bandwidth Sizing Guideline – VMware vSphere Fault Tolerance 4 User Manual


VMware white paper

It is recommended that FT primary virtual machines be distributed across multiple hosts and that, as a general rule of thumb, the number of FT virtual machines be limited to four per host. In addition to avoiding the possibility of saturating the network link, this limit also reduces the number of simultaneous live migrations required to create new secondary virtual machines in the event of a host failure.

2.8. DRS and VMotion

DRS takes into account the additional CPU and memory resources used by the secondary virtual machine in the cluster, but it does not migrate FT-enabled virtual machines to load balance the cluster. If either the primary or the secondary dies, a new secondary is spawned and placed on the candidate host determined by HA. That host may not be the optimal placement for load balancing; however, either the primary or the secondary virtual machine can be manually migrated with VMotion to a different host as needed.

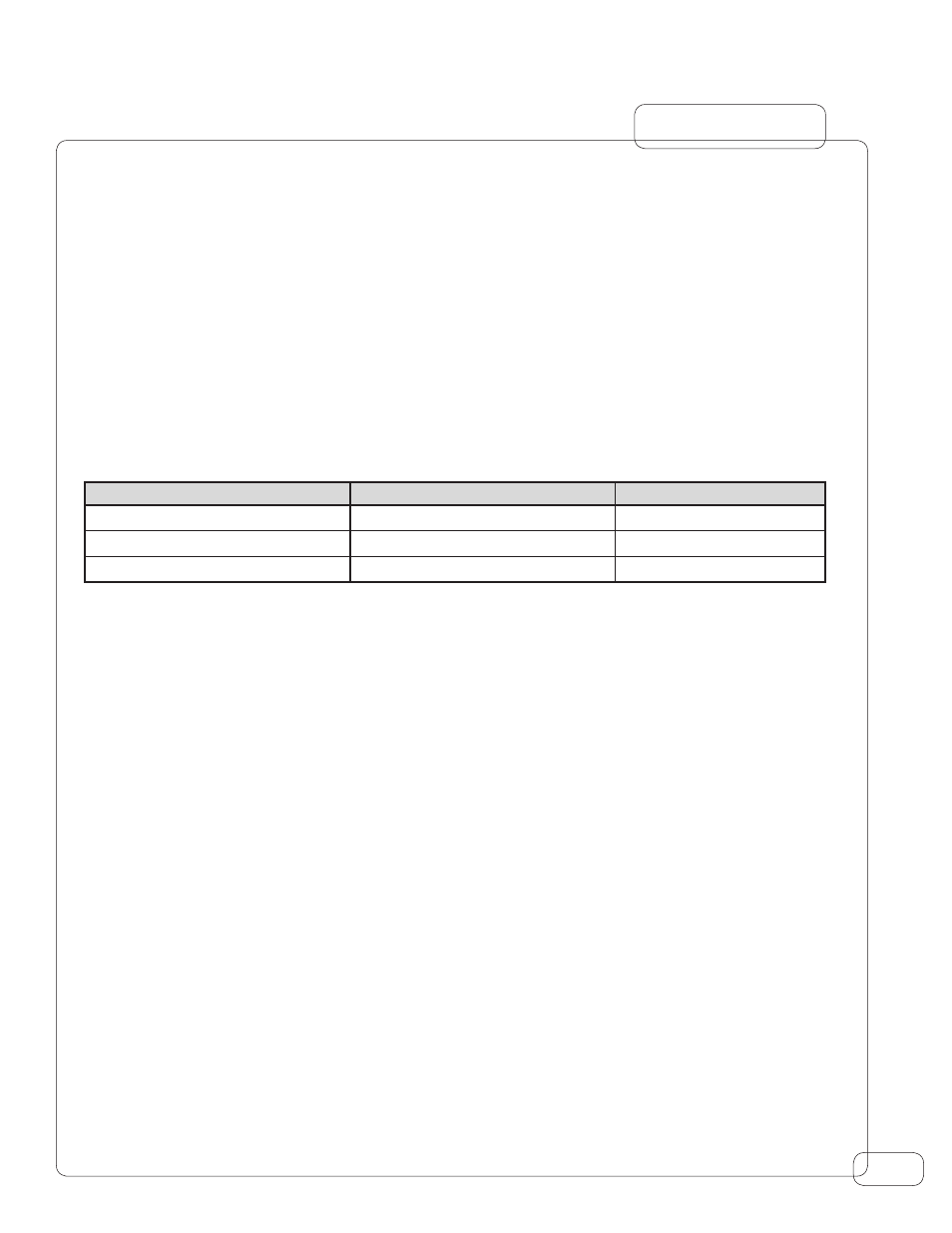

2.9. Timer Interrupts

Though timer interrupts do not significantly impact FT performance, all timer interrupt events must be recorded at the primary and replayed at the secondary. A lower timer interrupt rate therefore results in a lower volume of FT logging traffic. The following table illustrates this.

| Guest OS | Timer interrupt rate | Idle VM FT traffic |
|---|---|---|
| RHEL 5.0 64-bit | 1000 Hz | 1.43 Mbits/sec |
| SLES 10 SP2 32-bit | 250 Hz | 0.68 Mbits/sec |
| Windows 2003 Datacenter Edition | 82 Hz | 0.15 Mbits/sec |

Where possible, lowering the timer interrupt rate is recommended. See KB article 1005802 for more information on how to reduce timer interrupt rates for Linux guest operating systems.

2.10. Fault Tolerance Logging Bandwidth Sizing Guideline

As described in section 1.2, FT logging network traffic depends on the number of non-deterministic events and external inputs that need to be recorded at the primary virtual machine. Since the majority of this traffic usually consists of incoming network packets and disk reads, it is possible to estimate the amount of FT logging network bandwidth (in Mbits/sec) required for the virtual machine using the following formula:

FT logging bandwidth ~= [ (Average disk read throughput in Mbytes/sec * 8) + Average network receives (Mbits/sec) ] * 1.2

In addition to the inputs to the virtual machine, this formula reserves 20 percent additional networking bandwidth for recording non-deterministic CPU events and for the TCP/IP headers.
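
As a worked illustration of the sizing formula above, the following minimal Python sketch computes the estimate for a single virtual machine. The function name, parameter names, and the sample workload figures (5 Mbytes/sec of disk reads, 20 Mbits/sec of network receives) are hypothetical and chosen only for illustration; they are not measurements from this paper.

```python
def ft_logging_bandwidth_mbits(disk_read_mbytes_per_sec: float,
                               net_rx_mbits_per_sec: float,
                               headroom: float = 1.2) -> float:
    """Estimate FT logging bandwidth in Mbits/sec.

    Applies the sizing guideline:
        [(disk reads in Mbytes/sec * 8) + network receives in Mbits/sec] * 1.2
    The default headroom of 1.2 is the 20 percent reserve for
    non-deterministic CPU events and TCP/IP headers.
    """
    if disk_read_mbytes_per_sec < 0 or net_rx_mbits_per_sec < 0:
        raise ValueError("throughput values must be non-negative")
    # Convert disk reads from Mbytes/sec to Mbits/sec, then add network input.
    input_mbits = disk_read_mbytes_per_sec * 8 + net_rx_mbits_per_sec
    return input_mbits * headroom


if __name__ == "__main__":
    # Hypothetical workload, not taken from the paper.
    estimate = ft_logging_bandwidth_mbits(5, 20)
    print(f"Estimated FT logging bandwidth: {estimate:.1f} Mbits/sec")
```

For these assumed inputs, the disk reads contribute 40 Mbits/sec and the network receives 20 Mbits/sec, so the estimate is 60 * 1.2 = 72 Mbits/sec. Summing such estimates over the FT virtual machines on a host gives a rough check against the capacity of the FT logging link.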

3. Fault Tolerance Performance

This section discusses the performance characteristics of Fault Tolerant virtual machines using a variety of micro-benchmarks and real-life workloads. Micro-benchmarks were used to stress the CPU, disk, and network subsystems individually by driving them to saturation. Real-life workloads, on the other hand, were chosen to be representative of what most customers would run, and they were configured to have a CPU utilization of 60 percent in steady state. Identical hardware test beds were used for all the experiments, and the performance comparison was done by running the same workload on the same virtual machine with and without FT enabled. The hardware and experimental setup details are provided in the Appendix. For each experiment, the traffic on the FT logging NIC during the steady-state portion of the workload is also provided as a reference.

3.1. SPECjbb2005

SPECjbb2005 is an industry-standard benchmark that measures Java application performance with particular stress on CPU and memory. The workload is memory intensive and saturates the CPU but does little I/O. Because this workload saturates the CPU and generates little logging traffic, its FT performance depends on how well the secondary can keep pace with the primary.